<center>

# Typescript Validators Jamboree

<big>

**A Fair(ly cruel) Review of Typescript Validation Libraries**

</big>

*Written by Igor Loskutov. Originally published 2024-08-21 on the [Monadical blog](https://monadical.com/blog.html).*

</center>

## Motivation

Lots of validator libraries exist and pop up regularly in the Typescript world.

The fact that there are so many has to mean something.

Let’s examine some of them pragmatically and find out for ourselves.

What’s a validator? I use this term rather loosely here, as this kind of tool is sometimes called a “parser” or a “codec.” “Parser” doesn’t sit well with me because it also denotes [a wider CS concept](https://en.wikipedia.org/wiki/Parsing), which could cause confusion. “Codec” is a more accurate word, but not all the libraries in this review have “codec” semantics. But remember, kids: [parse, don’t validate!](https://lexi-lambda.github.io/blog/2019/11/05/parse-don-t-validate/) Most libraries here are actually “parsers,” just with a narrower set of features.

> The data format that all of those libraries validate is ultimately JSON-compatible dictionaries. That is dictated by how Javascript interacts with the outside world: whether you get a JSON string or a protobuf binary, at some stage, it usually ends up as an untyped dictionary with primitives or more dictionaries as values.

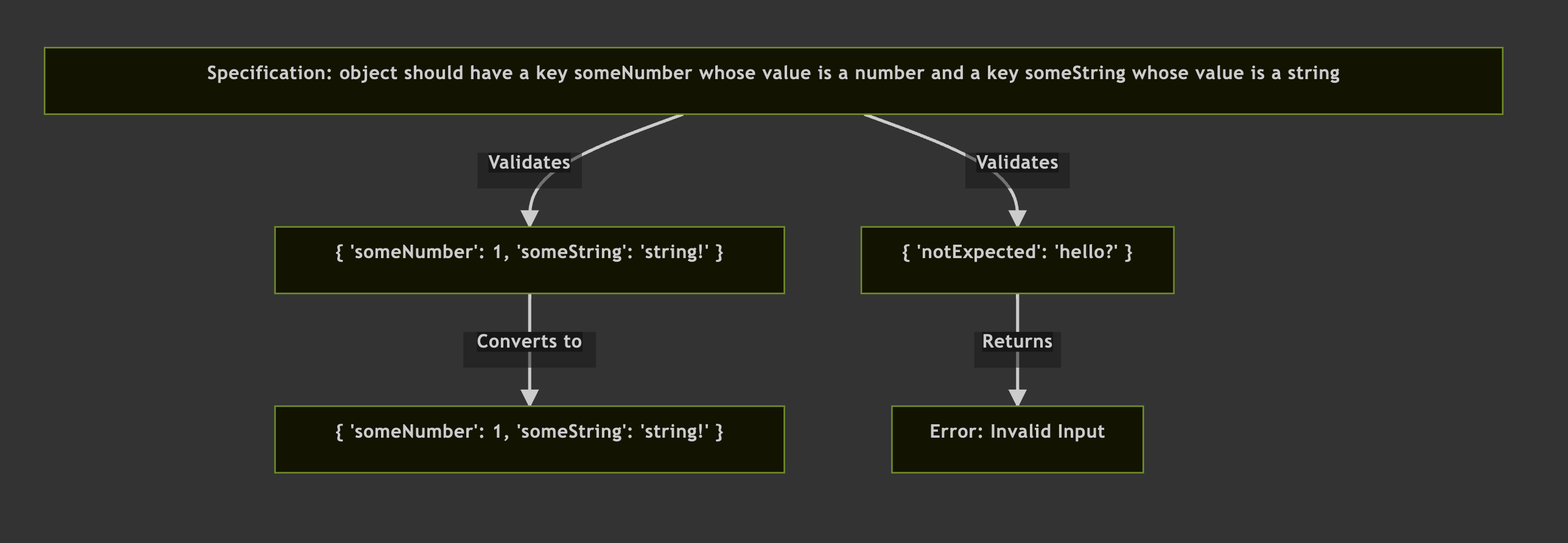

So, for this review, the validator is something that takes a piece of data that’s supposed to be specifically structured and checks that it indeed conforms to such a structure, carrying this information further into your codebase:

```mermaid
graph TD
A[Specification: object should have a key someNumber whose value is a number and a key someString whose value is a string]
B["{ 'someNumber': 1, 'someString': 'string!' }"]
C["{ 'someNumber': 1, 'someString': 'string!' }"]
D["{ 'notExpected': 'hello?' }"]
E[Error: Invalid Input]

A -->|Validates| B
B -->|Converts to| C
A -->|Validates| D
D -->|Returns| E
```
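To make the diagram concrete, here is the same flow written by hand, without any library. This is a minimal sketch, and `SomeData`, `ParseResult` and `parseSomeData` are names made up for illustration. Notice that the type and the checking logic are written separately and must be kept in sync manually. That duplication is exactly what the libraries below try to remove.

```typescript
// The specification from the diagram, written down as a Typescript type.
type SomeData = { someNumber: number; someString: string };

// The validator either returns the typed value or a failure; it never throws.
type ParseResult<T> = { ok: true; value: T } | { ok: false; error: string };

function parseSomeData(input: unknown): ParseResult<SomeData> {
  if (typeof input !== "object" || input === null) {
    return { ok: false, error: "Invalid Input: expected an object" };
  }
  const { someNumber, someString } = input as Record<string, unknown>;
  if (typeof someNumber !== "number" || typeof someString !== "string") {
    return { ok: false, error: "Invalid Input: wrong or missing fields" };
  }
  // From here on, the rest of the codebase can rely on the SomeData type.
  return { ok: true, value: { someNumber, someString } };
}

const good = parseSomeData(JSON.parse('{ "someNumber": 1, "someString": "string!" }'));
const bad = parseSomeData(JSON.parse('{ "notExpected": "hello?" }'));
```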

## Disclaimer

Let’s get this out of the way: I assume that most of the codebases out there need to interact with unreliable data sources, such as HTTP API inputs or sometimes even database reads. All such interactions benefit from having a structure and from validating that structure.

I also assume that most, if not all, of those codebases benefit from a transparent and well-defined domain model encoded in a type system, if such a system is present in your stack.

Here, I focus only on the Typescript ecosystem. That also means a library is out of scope if it doesn’t support the type system well enough, even if it “runs” with Typescript code.

Another thing that’s out of scope is runtime and compile-time performance. It’s a tricky beast that deserves its own research. One of the best performance comparisons out there is [https://moltar.github.io/typescript-runtime-type-benchmarks/](https://moltar.github.io/typescript-runtime-type-benchmarks/).

In this review, I assume we all agree that catching errors earlier is better than seeing them later. This disclaimer will come in handy later when I discuss criteria such as [tagged types](https://ybogomolov.me/primitives-were-a-mistake).

## Criteria

Among the other criteria, **expressiveness** will dominate the evaluation process. That means a special focus of this review is how well the validator libraries leverage the Typescript type system to bridge the gap between runtime “chaos” and compile-time “order.”

> It also means that being able to “transform” outside-world types into domain/language types is expected.
>
> The most obvious example is when, through JSON, we receive an array of items that are supposed to be unique, e.g., the user’s favourite colours: we don’t tolerate a user favouriting “pink” three times! In Javascript, this assumption naturally translates into the Set data structure.

**Composability** is another critical focus. I’d like to reuse already defined validators and combine them freely like LEGO blocks. Who wouldn’t? Real life dictates that sooner or later (and it eventually happens on most codebases), your data becomes fragmented, be it [partial API results](https://docs.stripe.com/expand) or multiple sources of truth.

Besides that, I’ll pay some attention to the **easy-to-use** aspect: whether the API looks reasonable (from my perspective, although I’ll try to be objective) and whether the library is easy to set up and maintain in your project.

With that said, there’s not going to be any point system or winners/losers, just an enumeration of what worked and what didn’t, together with my personal opinion, which is bound to be subjective sometimes.

Therefore, this review mostly “features” feature comparisons, which leads me to the main measuring instrument: **the test case**.

### The test case

It’s not an actual use case but a combination of use cases I’ve encountered. The purpose is to check as many features as possible with a reasonably compact data structure.

* User ID - it’s a string, but is it? Should I be able to pass it to a function that expects another domain-specific ID, such as a blog ID? I’d like my code not to allow making this mistake.

* User Name - is it allowed to be an empty string? Should it be of a type “string,” then?

* Email validation is quite tricky—some [would say impossible](https://www.netmeister.org/blog/email.html)—but I’d like to check whether libs 1) can do arbitrary regex validation and 2) realize that it isn’t a straightforward task. So, I’m going to consider the absence of built-in email validation a benefit and, on the contrary, **the presence of such a validation a detriment**.

* createdAt/updatedAt - first, this checks how the libs support [ISO 8601](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Date/toISOString) date parsing. It doesn’t have to be batteries-included, but it must be simple to implement. It could’ve been the [UNIX timestamp](https://en.wikipedia.org/wiki/Unix_time) instead; it doesn’t matter.[^1] Secondly, can the lib represent such a date as a Date object in my code? Believe it or not, not every library could do this. Last, can the lib perform “bird’s-eye view” checks comparing the values of multiple fields? After all, the phrase “User has been created **after** it was modified” doesn’t make much sense and often indicates temporal ordering errors in the system.

* Subscription type - just a check that a lib can do enums.

* Stripe Id - a check of whether the lib recognizes the existence of [template literals](https://www.typescriptlang.org/docs/handbook/2/template-literal-types.html) in Typescript. Typescript supports template literal types, so for the library’s feature completeness, I expect it to support them, too.

* Visits - it’s probably far from how I’d store this in a real model, but this check is for whether the lib can do integers. It is also important to me whether it’s possible to represent non-negative numbers, which live in the “uncanny valley” between Typescript compile time and runtime.

* Favourite Colours checks whether the lib 1) recognizes the existence of unique arrays, 2) can do Sets (and, consequently, any other arbitrary custom transformation), 3) can represent a union of an enum and a regex-checked string such as a hex colour value.

* Profile - a check of whether the lib recognizes the existence of [union types](https://www.typescriptlang.org/docs/handbook/unions-and-intersections.html). Union types are one of Typescript’s most powerful data design features. I can’t imagine a codebase that wouldn’t benefit from them. Here, profiles can be of two distinct types, each with its particular set of fields.

* File System is probably the most “imaginary” field in the User object! It checks whether the lib supports recursive data structures (called “lazy” in many libs because of implementation details) and is spiced up with unions.

You’ll see that some of my testing data structure fields check multiple features. This is done to test the **composability** of such features, too.
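Taken together, the fields above could be described by a type along these lines. This is a hypothetical sketch, not the schema used in the actual test app. The field names, enum members, palette, profile fields and the `Brand` helper are all assumptions made for illustration. The casts in the example value stand in for what a validator would have to prove at runtime.

```typescript
// Hypothetical brand helper: a compile-time-only tag on top of a runtime primitive.
type Brand<T, B extends string> = T & { readonly __brand: B };

type UserId = Brand<string, "UserId">;
type NonEmptyString = Brand<string, "NonEmptyString">;
type NonNegativeInteger = Brand<number, "NonNegativeInteger">;

type SubscriptionType = "free" | "premium"; // hypothetical enum members
type StripeCustomerId = `cus_${string}`; // template literal type
type NamedColour = "red" | "green" | "blue" | "pink"; // hypothetical palette
type HexColour = `#${string}`; // the exact hex format still needs a runtime regex

// Discriminated union: each profile kind has its own fields (names are made up).
type Profile =
  | { type: "listener"; favouriteGenre: string }
  | { type: "artist"; stageName: string };

// Recursive structure spiced up with a union.
type FileSystemNode =
  | { type: "file"; name: string }
  | { type: "directory"; name: string; children: FileSystemNode[] };

type User = {
  id: UserId;
  name: NonEmptyString;
  email: string; // checked with a custom regex, not a built-in "email" type
  createdAt: Date;
  updatedAt: Date;
  subscriptionType: SubscriptionType;
  stripeId: StripeCustomerId;
  visits: NonNegativeInteger;
  favouriteColours: Set<NamedColour | HexColour>;
  profile: Profile;
  fileSystem: FileSystemNode[];
};

const exampleUser: User = {
  id: "user-1" as UserId,
  name: "Jane" as NonEmptyString,
  email: "jane@example.com",
  createdAt: new Date("2024-07-18T21:54:30.000Z"),
  updatedAt: new Date("2024-07-19T08:00:00.000Z"),
  subscriptionType: "premium",
  stripeId: "cus_abc123",
  visits: 3 as NonNegativeInteger,
  favouriteColours: new Set<NamedColour | HexColour>(["pink", "#ff00ff"]),
  profile: { type: "artist", stageName: "The Validators" },
  fileSystem: [
    { type: "directory", name: "docs", children: [{ type: "file", name: "cv.pdf" }] },
  ],
};
```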

### Validity checks hierarchy

This is probably a good time to talk about types. I like types and would love to see them everywhere. Sorry, Lisp bros. I like the types that articulate domain design well.

I also like [domain-driven design](https://martinfowler.com/bliki/DomainDrivenDesign.html), so here we go.

Briefly, a type can be tightly knit between compile time and runtime. You see this, e.g., in a String. If I want a String in my code, I get it both while typing and running it. A String is always a String. There’s nothing to add here.

The same is true for a Number. If I want to count my users’ [money](https://www.youtube.com/watch?v=m6ID-dv2f34), I just use a Number. It’s beautiful. I can multiply and divide it, and my users’ money accumulates floating-point errors. I can also multiply it by itself. My users’ dollars become Dollars Squared: exactly what they need!

> Taken from an old [Reddit thread](https://www.reddit.com/r/softwaregore/comments/baz7x8/square_dollars/)

Wait a second, Igor. Have you gone mad? Dollars Squared? Well, check your codebase. If it’s possible to multiply your users’ money by itself, you certainly aren’t using your compiler to the full. Which is okay, but can we still do better?

But in reality, it’s not all black and white. Some types are not easily representable by the compiler. The closest example is Number in Typescript vs. the plethora of Integer, Float, UInt, etc., in better languages. Some type systems can express “I want this number to be natural only”; some can’t. Some can say, “I want this number to be 0 or more,” but some can’t.

The Typescript community plugs this gap with [newtypes](https://wiki.haskell.org/Newtype)[^2] and [branded](https://prosopo.io/articles/typescript-branding/) types. I’ll skip newtypes here and further: despite being a stricter and sometimes much more helpful abstraction, their DevEx [has been terrible](https://monadical.com/posts/property-based-testing-for-temporal-graph-storage.html) so far, and we’re pragmatic after all, so I’ll ignore their existence.

Nominal types, or branded types, though, help us close this gap of “I want this to be an integer [without going into BigInt],” “I want this number to be non-negative,” and “I want a User ID not to be assignable to a Blog ID.” At runtime, these types are effectively plain strings or numbers, but during compilation, they hint that they’re not, and sometimes that’s what we need.

They exist in this weird limbo between compile and runtime (validation-time) checks.
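A common way to express this limbo in plain Typescript is an intersection with a phantom property. The sketch below shows one possible encoding, not the only one. `Brand`, `toUserId`, `toBlogId` and `deleteBlog` are hypothetical names, and the `__brand` property never exists at runtime. The smart constructors play the role that a validator library plays at the system boundary.

```typescript
// Hypothetical brand helper; the __brand property exists only in the type system.
type Brand<T, B extends string> = T & { readonly __brand: B };
type UserId = Brand<string, "UserId">;
type BlogId = Brand<string, "BlogId">;

// Smart constructors: the only sanctioned way to obtain a branded value.
function toUserId(raw: string): UserId | undefined {
  return raw.length > 0 ? (raw as UserId) : undefined;
}
function toBlogId(raw: string): BlogId | undefined {
  return raw.length > 0 ? (raw as BlogId) : undefined;
}

function deleteBlog(id: BlogId): string {
  return `deleting blog ${id}`;
}

// Never called; it only exists to show the compile-time error.
function mixUpIds(userId: UserId): string {
  // @ts-expect-error: a UserId is not assignable to a BlogId
  return deleteBlog(userId);
}

const blogId = toBlogId("blog-7");
```

At runtime, `blogId` is just the string `"blog-7"`. The brand costs nothing beyond the check inside the constructor.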

Now, there’s a third kind of check, both the weakest as a guarantee and the strongest in the sense of “can do it all”: runtime-only checks. When you see a type `{createdAt, updatedAt}`, you can assume createdAt is less than or equal to updatedAt, but nobody will check it for you unless you do it yourself, at runtime[^3].
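Such a check is plain runtime code. Here is a minimal sketch with hypothetical names. The function returns a result instead of throwing. The hypothetical `OrderedTimestamps` brand lets later code demand the already-checked version in its signature, which is about as much type safety as this kind of check can get.

```typescript
type Timestamps = { createdAt: Date; updatedAt: Date };

// Hypothetical brand recording that the cross-field check has already run.
type OrderedTimestamps = Timestamps & { readonly __brand: "OrderedTimestamps" };

type CheckResult = { ok: true; value: OrderedTimestamps } | { ok: false; error: string };

function checkTimestamps(t: Timestamps): CheckResult {
  // The compiler cannot see this relation between two fields; only runtime can.
  if (t.createdAt.getTime() > t.updatedAt.getTime()) {
    return { ok: false, error: "createdAt must not be later than updatedAt" };
  }
  return { ok: true, value: t as OrderedTimestamps };
}

const ordered = checkTimestamps({
  createdAt: new Date("2024-07-18T21:54:30.000Z"),
  updatedAt: new Date("2024-07-19T08:00:00.000Z"),
});
const switched = checkTimestamps({
  createdAt: new Date("2024-07-19T08:00:00.000Z"),
  updatedAt: new Date("2024-07-18T21:54:30.000Z"),
});
```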

Hopefully, these 3 “levels” of strictness—**full compile-time**, **“pretended” compile-time/nominal types** and **runtime YOLO**—explain why I couldn’t just shut up about branded types and include them in the comparison. If I can make my types stricter without sacrificing DevEx, I see no reason not to do so.

### Libraries Selection Process

I’ll review all of the libraries.

Except those that don’t fit the criteria of:

* Lively: have a reasonable amount of fresh commits.

* Popular: have GitHub stars, talked about.

* Typescript support: not just “run” but support enough of the type system. Sorry, runtime js validators.

I may or may not include some of my favourite libs worthy of discussion. Examples include libraries that focus on the JSON Schema standard, ones that parse type definitions as an extra build step, and ones that parse string literals right in the Typescript type system to extract type definitions.

## Extra factors and context

### Typescript type system shenanigans

So, more often than not, we engineers say, “Don’t repeat yourself,” we engineers say, “Don’t repeat yourself.” We repeat that over and over. Repeating (or rather, the absence of such) is one of the cornerstones of validator libraries’ design.

Indeed, do you want to write your validation logic and then write types for it, too? Can I just write `object({ n: integer() })` and not duplicate it in types as `type O = {n: Integer}`?

All the libraries that passed the criteria for this review support this convenience, with some inessential exceptions.

> I’m convinced that defining types one extra time isn’t a big deal, though, as long as the type system doesn’t allow for lies like `object({ n: integer() }); type O = {s: string}`. You’ll see that in some cases, we’ll have to write extra type definitions, notably for recursive structures, to help Typescript infer them and not fall into infinite recursion.

This property is called “inference.” They “infer” types from runtime validation code. What’s important to emphasize, though, is that Typescript works only one way in this regard.

By default, Typescript won’t derive any runtime code from compile-time types unless you add extra build steps that do so in an arcane[^4] way. Instead, it organically understands the type of this or that runtime value if you define your types properly, which the library authors do.

That’s how `object({ n: integer() })` automatically becomes a type `{n: Integer}` without any extra effort from the user.

It helps a lot when writing your code; after defining the validator, you can infer a type from it and reuse it across your app, most notably as function argument types. That’s what validators ultimately are about – checking runtime external values and telling your functions they’re safe and ready to be worked with.
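To show there is no magic involved, here is a toy version of the idea, again with no library. Everything in it (`Schema`, `Infer`, `Output`, `number`, `string`, `object`) is a hypothetical mini-API written for this illustration. Real libraries are far more elaborate, but the general idea is similar: the static type is computed from the runtime schema value, here with a mapped type over the shape and `infer` on each field.

```typescript
// A hypothetical mini-library: a schema is a function that checks unknown input.
type Result<T> = { ok: true; value: T } | { ok: false; error: string };
type Schema<T> = (input: unknown) => Result<T>;

// Compile-time helpers that extract the type a schema produces.
type Infer<S> = S extends Schema<infer T> ? T : never;
type Output<Shape> = { [K in keyof Shape]: Infer<Shape[K]> };

const number = (): Schema<number> => (input) =>
  typeof input === "number" ? { ok: true, value: input } : { ok: false, error: "expected a number" };

const string = (): Schema<string> => (input) =>
  typeof input === "string" ? { ok: true, value: input } : { ok: false, error: "expected a string" };

function object<Shape extends Record<string, Schema<unknown>>>(shape: Shape): Schema<Output<Shape>> {
  const fields: Record<string, Schema<unknown>> = shape;
  return (input) => {
    if (typeof input !== "object" || input === null) return { ok: false, error: "expected an object" };
    const record = input as Record<string, unknown>;
    const out: Record<string, unknown> = {};
    for (const key of Object.keys(fields)) {
      const field = fields[key](record[key]);
      if (!field.ok) return { ok: false, error: `${key}: ${field.error}` };
      out[key] = field.value;
    }
    // Every key was checked above; the cast only re-attaches the inferred type.
    return { ok: true, value: out as unknown as Output<Shape> };
  };
}

// One runtime definition...
const SomeData = object({ someNumber: number(), someString: string() });
// ...and the type comes for free: { someNumber: number; someString: string }
type SomeData = Infer<typeof SomeData>;

const parsed = SomeData({ someNumber: 1, someString: "string!" });
```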

For better visualization, I’ll repurpose a well-known [ports and adapters architecture](https://en.wikipedia.org/wiki/Hexagonal_architecture_(software)) diagram[^5]:

So, the magic of inference happens mostly without your involvement. The important thing is that your business logic gets nice, sanitized data.

### Parsers and codecs dichotomy

What if I told you that you can handle not only your input data but the output data as well?

We tidy our tables before and after dining in a communal dining area[^6]. Why wouldn’t we tidy the data that leaves our apps?

It comes in especially handy with Javascript: JS tools often `JSON.stringify` your values before sending them over the network. That could cause a lot of trouble with types like Set:

```typescript
// A Set has no enumerable own properties, so it serializes to an empty object.
JSON.stringify(new Set(['1', '2'])); // -> '{}'
```

The problem here isn’t so much validation as proper conversion into serializable values.

The libraries that can do that have the “codecs” semantics, meaning they “decode” the input data and can “encode” the “business logic” objects back into a serializable format.

If the library can declaratively perform both encoding and decoding, e.g., `Array -> Set -> Array`, I’m going to “grade” it higher. Sorry, Zod and other one-way parsers, this doesn’t apply to you: although we can technically define two isomorphic transformations there, it won’t be a core feature but an afterthought.

As a nice side effect, the codec semantics allow for much better composability: a codec A -> B -> C can be piped with a codec C -> D -> E, which can be piped with E -> F -> A, etc.
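Here is a small sketch of codec semantics in plain Typescript, with no library involved. The `Codec` interface, `stringArray`, `uniqueStringSet` and `pipe` are hypothetical names. Real codec libraries differ in details such as error reporting and whether encoding can fail. In this sketch, decoding is partial (it may fail), encoding is total, and piping a `Codec<A, B>` into a `Codec<B, C>` yields a `Codec<A, C>`.

```typescript
type Result<T> = { ok: true; value: T } | { ok: false; error: string };

// A codec pairs a partial decode (I -> O, may fail) with a total encode (O -> I).
interface Codec<I, O> {
  decode(input: I): Result<O>;
  encode(output: O): I;
}

// unknown <-> string[]
const stringArray: Codec<unknown, string[]> = {
  decode: (input) =>
    Array.isArray(input) && input.every((x) => typeof x === "string")
      ? { ok: true, value: input as string[] }
      : { ok: false, error: "expected an array of strings" },
  encode: (arr) => arr,
};

// string[] <-> Set<string>; duplicates are rejected instead of silently dropped.
const uniqueStringSet: Codec<string[], Set<string>> = {
  decode: (input) => {
    const set = new Set(input);
    return set.size === input.length ? { ok: true, value: set } : { ok: false, error: "duplicate items" };
  },
  encode: (set) => Array.from(set),
};

// Composition: decode left to right, encode right to left.
function pipe<A, B, C>(first: Codec<A, B>, second: Codec<B, C>): Codec<A, C> {
  return {
    decode: (input) => {
      const mid = first.decode(input);
      return mid.ok ? second.decode(mid.value) : mid;
    },
    encode: (output) => first.encode(second.encode(output)),
  };
}

const favouriteColours = pipe(stringArray, uniqueStringSet);
const decoded = favouriteColours.decode(JSON.parse('["red", "pink"]'));
```

Encoding the decoded Set gives back `["red","pink"]`, so the value is safe to `JSON.stringify` again.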

### Frontend as a 1st-class citizen

The diagrams above may give the impression that validators are usable only for backend APIs, but that’s not the case at all!

It’s Javascript, and we’re going to leverage this fact entirely.

There are a lot of form libraries that have adapters for popular validators (perhaps a topic for another article).

You still have to talk with the APIs and parse their results (do you trust them? Do you always trust your own backend, actually[^7]?).

You may be of an adventurous nature and let your users write into your [Firestore](https://firebase.google.com/docs/firestore), or you may be making a .io game where users talk to each other through WebRTC. Or you may want to be more classy and validate forms.

Doesn’t it remind you of the same ports and adapters we’ve seen already?

If that’s not enough, we can also use [monorepos](https://en.wikipedia.org/wiki/Monorepo) to share codecs/parsers code and type information — what a blessing that is!

That said, you see where I’m going. All the libraries in this review have to be able to work on the front end.

Now, that was a long interlude! Unfortunately, it’s over. It's time to get dirty (with unvalidated JSONs) now. I’ll go alphabetically so as not to pick any favourites.

## Libraries

Each library will have several code widgets: the editable JSON (try it out) that I use for testing; the source code that does validation/transformations, with comments on the API here and there; the view output of the validated object (its JS inner representation at this point, not necessarily JSON, so some liberties are taken: I print “JS object” along with the string representation to show it); and the view output of this object converted back (with encoders, if present) to the initial JSON-serializable type.

## AJV

Bearing the recursive name “Ajv JSON [schema] Validator,” it’s one of the special cases in this review. It specializes in supporting the [JSON Schema](https://json-schema.org/) and [JSON Type Definition](https://jsontypedef.com/) standards. I’ll use the former here for no reason except that the library’s name literally has “JSON Schema” in it.

The library is mature, tremendously popular, and is well-maintained[^8].

<iframe src="https://parsers-jamboree.apps.loskutoff.com/ajv" height="500" width="100%"></iframe>

You’ll see that it doesn’t cover some test cases that it is supposed to. This is because many type checks aren’t well-typed, and I’d have to resort to “any” to implement them. This is understandable: with a focus on JSON Schema definitions, I guess it’s very difficult to translate Typescript types one-to-one. I decided to mark them as failed for the reasons in the introduction: Typescript has to be supported fully, or no deal.

A downside, at least for some, is that it doesn’t infer types. You’ll have to duplicate the type definition and the JSON Schema definition you have. As I mentioned in the introduction, I don’t consider this a big issue, but some may get annoyed. This is probably the only library here that makes you do that.

Another peculiarity of this library is that it mutates its argument on parsing. It made me stop and think for a moment, because it broke my app and corrupted the input data for all the other validators.

It shouldn’t be an issue in your production code; it’s unlikely that anything can go wrong in actual use cases.

## Arktype

Arktype has a unique concept: it parses [template literals](https://www.typescriptlang.org/docs/handbook/2/template-literal-types.html) in parallel at runtime and compile time. Its own template literal syntax mirrors Typescript. Together, these points allow for very positive DevEx, especially since the library provides its own plugins for popular IDEs.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/arktype" height="500" width="100%"></iframe>

Pay attention to how parsers are defined. It’s a true joy (no pun intended, [Joi](https://github.com/hapijs/joi)) to work with. It feels like magic, a work of art, or both.

It seems to have very good development traction and is in the stage of active development right now. That said, some features that I’m looking for aren’t ready yet.

The lib’s inner workings are even more peculiar: the authors test it by bringing types into runtime with their own tool, [attest](https://github.com/arktypeio/arktype/tree/main/ark/attest).

If I were giving prizes, Arktype would’ve taken “the highest hopes” one.

They [recently](https://x.com/arktypeio/status/1816172678748700833) released the beta of 2.0, and I will update their benchmark entry when it is released. Notably, they will add morphs, so I can change my input arrays into output Sets, ISO strings into Dates, etc.

## Decoders

This library doesn’t compare itself with others or have unique features. The API is neat and has everything you’d expect from a validator library: pre-processors, refinements, transformations. Decoders don’t seem well-composable, though, as I had to redefine some things twice. It also doesn’t seem to have enough Typescript support for discriminated unions[^9], so many important tests fail.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/decoders" height="500" width="100%"></iframe>

## Effect-schema

Effect-schema, created by the [effect.ts](https://effect.website/) developers, is probably the most feature-complete library here. I haven’t found any way to break it, and it handles complex Typescript inference perfectly. It’s not well-known yet, but it’s well-maintained and regularly updated thanks to the strong development push behind effect.ts. I consider it to be [io-ts's](https://github.com/gcanti/io-ts/blob/master/index.md) successor, both for its very similar API and because the io-ts creator went on to work with the effect.ts team. Io-ts has existed for quite a while, so I deem effect-schema mature.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/effect-schema" height="500" width="100%"></iframe>

It adheres to the “codecs” semantics, being able to convert values back and forth.

## Rescript-schema

A library focused on DevEx and small bundle size. It is the [fastest of them all](https://moltar.github.io/typescript-runtime-type-benchmarks/).

<iframe src="https://parsers-jamboree.apps.loskutoff.com/rescript-schema" height="500" width="100%"></iframe>

It has another superpower that makes it stand out: it’s written in [Rescript](https://rescript-lang.org/), a JS dialect, and is bundled for Typescript.

## Runtypes

Although it wasn’t very lively at the time of writing, Runtypes still covers many Typescript concepts that some other libraries fail to recognize, including template literals, branded types, and unions.

I struggled to find its special power (and there’s no comparison/motivation versus other libs in the readme either) until I discovered that the maintainers’ principled stance is [not to do any transformations](https://github.com/runtypes/runtypes/issues/56). So, an Array always stays an Array and never becomes a Set, and vice versa. It’s a respectable point of view, and I empathize with it, although the reality is that the default expectation from a validator library is to be able to convert types. If we ignore that, though, Runtypes covers a lot of ground feature-wise.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/runtypes" height="500" width="100%"></iframe>

## Schemata-ts

Another slowly maintained but feature-rich library. It features JSON Schema generation and is positioned as the successor to io-ts. I think it’s a [reaction](https://github.com/fp-tx/core) to the fp-ts and io-ts maintainers leaving for effect.ts. The sentiment is understandable, but I think effect.ts has much more traction because it has far more development resources at this point.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/schemata-ts" height="500" width="100%"></iframe>

Notably, the only failed test is a simple refinement to ensure the array is unique before converting it to a Set. Maybe it’s a skill issue on my part. With more effort, it could’ve been possible, but I also consider the DevEx aspect here, so it’s one “fail.”

## Superstruct

The purpose of Superstruct, among other things, is to provide detailed errors, make custom types easy, and [introduce more exceptions](https://github.com/ianstormtaylor/superstruct?tab=readme-ov-file#why) into your code.

Superstruct is one of the few libraries with a motivation section in the readme. Its “Why” section compares it with several libs, but not with Zod. I find this a bit weird, since Zod is the King of validators today.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/superstruct" height="500" width="100%"></iframe>

## Typebox

Typebox is a feature-rich type checker whose superpower is its ability to generate JSON Schema (which makes it compatible with, e.g., Ajv). It gives the impression that the library is very mature and well-tended.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/typebox" height="500" width="100%"></iframe>

A unique feature is that it generates JSON Schema “out of the box” as a core feature. It fails some of my feature tests due to this adherence to JSON Schema, but all things considered, I probably would’ve chosen it anyway if I had to work with JSON Schema. One thing that seems weird about the API is its many functions unrelated to the validation task, e.g., “[mutate](https://github.com/sinclairzx81/typebox?tab=readme-ov-file#mutate)” for mutating a nested object.

## Valibot

Another library with a special feature/superpower. This one takes pride in its small bundle size, leverages tree-shaking with a functional API, and claims high performance. Originally a [Bachelor’s thesis](https://valibot.dev/thesis.pdf), it was [endorsed](https://www.builder.io/blog/introducing-valibot) by several famous people, including Miško Hevery, one of the AngularJS creators. It seems to be actively maintained.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/valibot" height="500" width="100%"></iframe>

I enjoyed its API; it was a breeze to use, although some things were counter-intuitive. For instance, the [default Set combinator](https://github.com/fabian-hiller/valibot/issues/685) doesn’t take an Array (as I would expect from a library that parses JSON) but instead requires another Set (a much rarer case; many codebases may never need that). Custom type definition typing was also weak at the time of writing (see the source code above). Overall, I liked it, and I’d take this library if I needed a tiny bundle size. However, the API also seems immature in some places.

## Valita

The library's primary goal is to be as powerful as Zod but with higher performance.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/valita" height="500" width="100%"></iframe>

What stands out is that they took a stance against implementing asynchronous parsing. I support this decision and think that async is not a concern of validation libraries. Although many others include this feature, I think it primarily serves “marketing” (making the library more popular because it’s a very often requested feature) rather than the library’s feature integrity.

## Yup

One of the most popular validators, likely in part because it was released long ago and took the niche early. I wasn’t able to make it validate many cases and keep the typing at the same time. Perhaps it fares better in JS projects.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/yup" height="500" width="100%"></iframe>

## Zod

Zod is the Elvis of validators. It dominates this area of Typescript software, and not without reason. It’s battle-tested, feature-rich, and, importantly, enjoys wide support in the ecosystem: more often than not, other libraries and frameworks that need to outsource validation refer to Zod as the default choice.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/zod" height="500" width="100%"></iframe>

I did [manage to break](https://github.com/colinhacks/zod/issues/3628) it at the intersection of the recursive structures feature and the discriminated union feature, but that doesn’t mean it doesn’t support them separately.

I’d take Zod into my codebase if I were looking for the safest choice.

## Test cases elaborated

I’m not going to name any “winners” but encourage you to decide for yourself what’s best for your project. Regardless of how many of my checks a library passed, there’s more context and more considerations than just test cases. The features you need, the performance you aim for, the code style of your project, and the needs of the people you're working with are all important factors to consider.

<iframe src="https://parsers-jamboree.apps.loskutoff.com/summary?iframe" height="630px" width="100%"></iframe>

That said, some elaboration on the test cases I used is due. Most of it will repeat the introduction’s “criteria,” but some are extra considerations that don’t just concern the data itself.

Many tests also focus not just on features but also on such features working together. If a library declares a feature, I expect it to work with all other features of this library to a reasonable extent.

**acceptsTypedInput:** A “compile-time” test showing that the lib can work not only on `unknown`s (or `any`s) but on other types as well. I find this feature very useful when I want to additionally validate/transform the results of third-party libs, such as [StripeJS](https://www.npmjs.com/package/@stripe/stripe-js). Still, I don’t want to be able to make the mistake of sending an unrelated object to validation.
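In plain Typescript terms, the point is that a decoder’s input type doesn’t have to be `unknown`. Here is a minimal sketch. `SdkCustomer`, `Customer` and `decodeCustomer` are hypothetical and do not reflect StripeJS’s actual types.

```typescript
// Hypothetical shape of an object returned by some third-party SDK.
type SdkCustomer = { id: string; email: string | null };
// What the rest of the app wants to work with.
type Customer = { id: string; email: string };

type Result<T> = { ok: true; value: T } | { ok: false; error: string };

// The input is typed, so the compiler rejects unrelated objects before runtime.
function decodeCustomer(input: SdkCustomer): Result<Customer> {
  if (input.email === null) return { ok: false, error: "customer has no email" };
  return { ok: true, value: { id: input.id, email: input.email } };
}

// Never called; it only exists to show the compile-time error.
function sendWrongObject(): Result<Customer> {
  // @ts-expect-error: a blog post is not an SdkCustomer
  return decodeCustomer({ title: "a blog post" });
}
```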

**addFavouriteRed:** Adds a duplicated valid colour to the favouriteColours field. Although in some cases it's OK, other times I'd like to have no garbage in my database. Having duplicated values in a collection with "set" semantics means that one side of the interaction doesn't know what it's doing, and this is a potential time bomb best defused as early as possible.

**addFavouriteTiger:** Adds an invalid colour to the favouriteColours field. Enough said.
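For reference, here is what such a colour check might look like by hand: a union of a closed list of names and a template literal type, backed by a runtime guard. The palette and the six-digit hex regex are assumptions made for illustration.

```typescript
// Hypothetical palette; "as const" keeps the literal types for the union below.
const namedColours = ["red", "green", "blue", "pink"] as const;
type NamedColour = (typeof namedColours)[number];
type HexColour = `#${string}`;
type Colour = NamedColour | HexColour;

// Assumption: only six-digit hex colours such as #ff00ff are accepted.
const hexPattern = /^#[0-9a-fA-F]{6}$/;

function isColour(value: unknown): value is Colour {
  if (typeof value !== "string") return false;
  return namedColours.some((name) => name === value) || hexPattern.test(value);
}
```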

**addFileSystemDupeFile:** This test adds a duplicated value to the file system tree. My tree has the “unique list” semantics, so that shouldn’t be possible.

**addFileSystemUFOType:** An enum test that is not unlike the Tiger test but in composition with recursive data structures.
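Combining recursion, a union and a uniqueness rule by hand could look like the sketch below. It is hypothetical. The node shape, the rule that sibling names must be unique, and the error format are assumptions, not the actual test schema. Each directory’s children are decoded by the same function that decodes the top level.

```typescript
type FileSystemNode =
  | { type: "file"; name: string }
  | { type: "directory"; name: string; children: FileSystemNode[] };

type Result<T> = { ok: true; value: T } | { ok: false; error: string };

// Decodes a list of sibling nodes; sibling names must be unique (an assumption).
function decodeNodes(input: unknown, path: string): Result<FileSystemNode[]> {
  if (!Array.isArray(input)) return { ok: false, error: `${path}: expected an array` };
  const seen = new Set<string>();
  const nodes: FileSystemNode[] = [];
  for (const raw of input) {
    const node = decodeNode(raw, path);
    if (!node.ok) return node;
    if (seen.has(node.value.name)) {
      return { ok: false, error: `${path}: duplicate entry "${node.value.name}"` };
    }
    seen.add(node.value.name);
    nodes.push(node.value);
  }
  return { ok: true, value: nodes };
}

// Decodes a single node, recursing into directories via decodeNodes.
function decodeNode(input: unknown, path: string): Result<FileSystemNode> {
  if (typeof input !== "object" || input === null) return { ok: false, error: `${path}: expected an object` };
  const { type, name, children } = input as Record<string, unknown>;
  if (typeof name !== "string") return { ok: false, error: `${path}: name must be a string` };
  if (type === "file") return { ok: true, value: { type: "file", name } };
  if (type === "directory") {
    const decoded = decodeNodes(children, `${path}/${name}`);
    return decoded.ok ? { ok: true, value: { type: "directory", name, children: decoded.value } } : decoded;
  }
  return { ok: false, error: `${path}/${name}: unknown node type` };
}
```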

**addTwoAtsToEmail:** Well, technically, an email can have two “at”s, and there’s no good way to check an email anyway, so it’s mostly about checking whether the lib supports custom regexes. I purposely try not to use the libs’ built-in email checkers; moreover, if the library checks an email but doesn’t warn about its caveats in the docs, I consider it a shortcoming.

**emailFormatAmbiguityIsAccountedFor:** Many libraries warn about email validation caveats in their docs, but some include default email validations and don’t warn that it’s im[possible](https://www.reddit.com/r/coding/comments/4d5lqh/it_is_impossible_to_validate_an_email_address/) to [validate an email](https://web.archive.org/web/20160522060130/https://elliot.land/validating-an-email-address). I wasn’t sure whether to include this benchmark, as it borders on nitpicking, but given that many libs really care about it, I decided it’s going to stay.

**clearName:** Checks for very basic refinements. For example, you very often don’t expect some strings, like the user’s name, to be empty.

**prefixCustomerId:** This is basically a “regex” case, but it also explores whether the lib supports template literals. It's not strictly needed, but I keep it here as a reminder that template literals can’t be automatically tested at runtime. I moved them into a separate, “manual” test case.

**setCreatedAtCyborgWar:** Feeds the validator an invalid date value. I expect only the ISO date format returned by `new Date().toISOString()`, which is what JSON.stringify produces for a Date. There’s a separate argument for why I prefer ISO timestamps over Unix timestamps, but it doesn’t matter here: what matters is how libraries handle this case. Built-in ISO validation isn’t the only way to pass: if it’s easy enough to define a custom validation for an ISO date, I consider it a success, too.

**setHalfVisits:** Sets the “visits” counter to a float value. Who wants 1.5 visits to their service? There’s a vast array of domain values that benefit from being integers. Typescript doesn’t account for them by default, and we need to help it.
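Since Typescript has a single `number` type, integers and non-negative numbers have to be branded. Here is a minimal sketch, with `Brand` and `toNonNegativeInteger` as hypothetical names.

```typescript
// Hypothetical brand helper; the __brand property exists only in the type system.
type Brand<T, B extends string> = T & { readonly __brand: B };
type NonNegativeInteger = Brand<number, "NonNegativeInteger">;

// Rejects 1.5, -1, NaN and Infinity; everything else is branded as-is.
function toNonNegativeInteger(n: number): NonNegativeInteger | undefined {
  return Number.isInteger(n) && n >= 0 ? (n as NonNegativeInteger) : undefined;
}

const visits = toNonNegativeInteger(3);
```

One design consequence worth knowing: arithmetic drops the brand, so `visits + 1` is a plain `number` again. It has to go back through the constructor if you want to keep the guarantee.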

**setProfileArtist:** Sets a Listener’s “profile” to an Artist’s profile data. Listeners’ fields aren’t Artists’ fields here, so it shouldn’t be possible.
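One way to make this check strict is to treat the `type` field as the discriminant and reject keys that belong to the other variant, rather than silently stripping them. This is a hypothetical sketch. The profile field names and the allowed-keys table are assumptions made for illustration.

```typescript
type Profile =
  | { type: "listener"; favouriteGenre: string }
  | { type: "artist"; stageName: string };

type Result<T> = { ok: true; value: T } | { ok: false; error: string };

// The keys each variant may carry; anything else is rejected.
const allowedKeys: Record<Profile["type"], readonly string[]> = {
  listener: ["type", "favouriteGenre"],
  artist: ["type", "stageName"],
};

const isProfileType = (value: unknown): value is Profile["type"] =>
  value === "listener" || value === "artist";

function decodeProfile(input: unknown): Result<Profile> {
  if (typeof input !== "object" || input === null) return { ok: false, error: "expected an object" };
  const record = input as Record<string, unknown>;
  const { type, favouriteGenre, stageName } = record;
  if (!isProfileType(type)) return { ok: false, error: "unknown profile type" };
  // Strictness: a listener carrying artist fields is an error, not a silent strip.
  const allowed = allowedKeys[type];
  const extra = Object.keys(record).filter((key) => allowed.indexOf(key) === -1);
  if (extra.length > 0) return { ok: false, error: `unexpected keys for ${type}: ${extra.join(", ")}` };
  if (type === "listener") {
    return typeof favouriteGenre === "string"
      ? { ok: true, value: { type, favouriteGenre } }
      : { ok: false, error: "favouriteGenre must be a string" };
  }
  return typeof stageName === "string"
    ? { ok: true, value: { type, stageName } }
    : { ok: false, error: "stageName must be a string" };
}
```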

**setSubscriptionTypeBanana:** A fundamental Enum check.

**switchDates:** A tricky check that the lib allows defining refinements involving multiple fields. createdAt being after updatedAt indicates an error down the line that we’d like to catch as early as possible. This type of check, in my opinion, plays along with Typescript the worst of them all. I watch for at least some reasonable type-safety here.

**encodedEqualsInput:** This check stands apart from the others. The library is supposed to define a codec A -> B -> A, and this check requires the resulting A to equal the original A at runtime: we don’t want to lose or mutate data. For example, “2024-07-18T21:54:30Z” needs to become a Date, and when it goes back to a string, it had better be “2024-07-18T21:54:30Z” again.
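
The example above is trickier than it looks, because `Date.prototype.toISOString()` always emits milliseconds. A sketch with a hypothetical `Codec` type and two illustrative string/`Date` codecs shows the naive one failing the check:

```typescript
// Hypothetical codec shape: decode A -> B, encode B -> A.
type Codec<A, B> = { decode: (a: A) => B; encode: (b: B) => A };

// Naive string <-> Date codec.
const naiveIsoDate: Codec<string, Date> = {
  decode: (s) => new Date(s),
  encode: (d) => d.toISOString(),
};

// Variant that drops a ".000" milliseconds suffix, so second-precision
// inputs survive the round trip.
const compactIsoDate: Codec<string, Date> = {
  decode: (s) => new Date(s),
  encode: (d) => d.toISOString().replace(/\.000Z$/, "Z"),
};

// The encodedEqualsInput property: encode(decode(a)) must equal a.
const roundTrips = <A, B>(codec: Codec<A, B>, input: A): boolean =>
  codec.encode(codec.decode(input)) === input;

console.log(naiveIsoDate.encode(naiveIsoDate.decode("2024-07-18T21:54:30Z")));
// prints 2024-07-18T21:54:30.000Z
```

The tweaked `compactIsoDate` passes for second-precision input but now fails for `"2024-07-18T21:54:30.000Z"`: a codec that doesn’t keep the original formatting can only round-trip one canonical form.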

**templateLiterals:** Typescript supports [template literal types](https://www.typescriptlang.org/docs/handbook/2/template-literal-types.html), so a validator library that supports Typescript is expected to support them too.

**transformationsPossible:** Whether a transform A -> B is supported. Some libraries take an “only validation” stance. I respect the Unix-philosophy approach, but I always end up needing transformations eventually. If I get the string “2024-07-18T21:54:30Z”, I don’t want it to stay a string; that’s not very useful. In my code base, I want it to be a Date (or any [other](https://day.js.org/) [convenient](https://date-fns.org/) [format](https://github.com/moment/luxon)) until it’s ready to be sent over the network again and become “2024-07-18T21:54:30Z”.
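
Mechanically, a transformation is just a function applied to a successful parse result. A minimal sketch with a hypothetical `Parser` type and `andThen` combinator (not any particular library’s API) that turns a string parser into a `Date` parser:

```typescript
type Result<T> = { ok: true; value: T } | { ok: false; error: string };
type Parser<T> = (input: unknown) => Result<T>;

// Base parser: accepts only strings.
const str: Parser<string> = (input) =>
  typeof input === "string"
    ? { ok: true, value: input }
    : { ok: false, error: "expected a string" };

// Run `p`, then feed its value to `f`. Failures from `p` pass through
// unchanged; `f` can fail too (e.g. a valid string that isn't a date).
const andThen =
  <A, B>(p: Parser<A>, f: (a: A) => Result<B>): Parser<B> =>
  (input) => {
    const r = p(input);
    return r.ok ? f(r.value) : r;
  };

// Transformation: string -> Date.
const dateFromString: Parser<Date> = andThen(str, (s): Result<Date> => {
  const d = new Date(s);
  return Number.isNaN(d.getTime())
    ? { ok: false, error: "invalid date" }
    : { ok: true, value: d };
});
```

Note that `new Date(s)` is lenient and accepts formats other than ISO 8601, so a stricter date check would belong inside the function passed to `andThen`.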

**branded:** Whether branded types are recognized. This is a hard requirement for me: I don’t work with bare primitives only anymore. I usually recommend that everyone consider stricter types “for free” via branding techniques, especially since they go so well with the validator/parser/decoding approach. That said, branded types can be implemented “manually” with any lib that supports transformations.
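
For reference, a common branding technique intersects the base type with a phantom property that exists only at the type level, and a parser becomes the only sanctioned way to obtain a branded value. A minimal sketch; `Brand`, `UserId`, `parseUserId` and `loadUser` are hypothetical names:

```typescript
// Phantom brand key: declared for the type system only, never emitted at runtime.
declare const brand: unique symbol;
type Brand<T, B extends string> = T & { readonly [brand]: B };

type UserId = Brand<string, "UserId">;

// The parser is the only place where a plain string becomes a UserId.
function parseUserId(input: string): UserId | undefined {
  return input.length > 0 ? (input as UserId) : undefined;
}

function loadUser(id: UserId): string {
  return `loading user ${id}`;
}

const id = parseUserId("u-123");
if (id !== undefined) {
  loadUser(id); // compiles: `id` is a UserId
}
// loadUser("u-123"); // compile error: a plain string is not a UserId
```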

**typedErrors:** If errors returned by the library are “any” or “unknown,” I don’t consider it to support Typescript. That also means throw-only semantics won’t pass, even when the docs show an “as” cast. Also, I think a validator library, more than anything else, should recognize that failed validation is a valid code execution branch to be tended to. Throwing here means using exceptions for control flow, which is a widely recognized no-no.
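
To illustrate the alternative, here’s a hypothetical parser that returns failures as a typed discriminated union instead of throwing, so the compiler can check that every error case is handled. The error shapes and the `Point` type are illustrative, not taken from any reviewed library:

```typescript
// Hypothetical error shapes: a discriminated union the caller can switch on.
type ValidationError =
  | { kind: "missing_field"; field: string }
  | { kind: "wrong_type"; field: string; expected: string };

type Result<T> =
  | { ok: true; value: T }
  | { ok: false; errors: ValidationError[] };

type Point = { x: number; y: number };

// Failure is an ordinary return value, not an exception.
function parsePoint(input: unknown): Result<Point> {
  if (typeof input !== "object" || input === null) {
    return { ok: false, errors: [{ kind: "wrong_type", field: "(root)", expected: "object" }] };
  }
  const o = input as Record<string, unknown>;
  const errors: ValidationError[] = [];
  for (const field of ["x", "y"]) {
    if (!(field in o)) errors.push({ kind: "missing_field", field });
    else if (typeof o[field] !== "number") errors.push({ kind: "wrong_type", field, expected: "number" });
  }
  if (errors.length > 0) return { ok: false, errors };
  return { ok: true, value: { x: o.x as number, y: o.y as number } };
}

// The compiler checks that every error kind is handled.
function formatError(e: ValidationError): string {
  switch (e.kind) {
    case "missing_field":
      return `${e.field} is missing`;
    case "wrong_type":
      return `${e.field} should be a ${e.expected}`;
    default: {
      // Fails to compile if a new ValidationError kind isn't handled above.
      const unhandled: never = e;
      return unhandled;
    }
  }
}
```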

**canGenerateJsonSchema:** Whether the schema definition can be exported as JSON Schema for cross-system communication. This became more relevant with OpenAI introducing [structured outputs](https://openai.com/index/introducing-structured-outputs-in-the-api/), which is the reason I added it as a separate test.

## Enough!

So, this was quite an opinionated review indeed.

Hopefully, this review stands out from the many “10 best ts validator libraries” articles: I adhered to a transparent methodology for comparing the libraries, which should help you make an educated decision.

Who wins your heart is really up to you and your project. Does the project have rigid type-system adepts on board? Does it require top-notch performance? Are your colleagues already too busy with things more important than JSON validation, and would they rather take the path of least resistance?

And importantly, what *do you* feel fits and is enjoyable to work with?

Leave a comment about your favourite libs and most wanted features!

And PRs are welcome: https://github.com/dearlordylord/parsers-jamboree


## Notes

[^1]: Ackchyually, it does matter to me, as I consider sending ISO dates superior because they improve the observability of the system, but it really doesn’t matter in the scope of this review.

[^2]: https://kubyshkin.name/posts/newtype-in-typescript/

[^3]: Now, that’s not strictly true: you can try to elevate some of these cases into the “branded” universe, but DevEx is going to suffer too much, in my opinion.

[^4]: Well, maybe it’s not so difficult; I just never looked into it.

[^5]: Also called “Hexagonal,” but I don’t really care about having hexes here.

[^6]: Unless you’re too rich to know what it is. I can’t help you then (and you probably don’t need any help anyway).

[^7]: An API change may happen when you don’t have versioning and a deploy didn’t fully go through, when cached frontend code didn’t refresh properly, or when you improperly JSON.stringify-ed an object that has no faithful JSON representation. This class of problems should never happen, but it still does, and we want to catch it ASAP.

[^8]: Here and further: “at the moment of writing this.”

[^9]: https://basarat.gitbook.io/typescript/type-system/discriminated-unions