<center>

# The Scraping-With-Cookies Dilemma

Originally published 2025-03-29 by <a href="https://blog.sweeting.me">Nick Sweeting</a> on <a href="https://blog.monadical.com">blog.monadical.com</a>.

<hr/>

<b style="opacity: 0.8">The internet archiving and scraping industries have a growing problem.</b>

<small>We don't know how to handle cookies 🍪</small>


</center>

A quick primer first...

---

> #### How Archiving Works
>
> 📖 You can capture sites as they appear publicly, with no cookies (like Archive.org)
>
> *or*
>
> 👤 You can capture sites as a logged-in user (like [WebRecorder](https://webrecorder.net), [ArchiveBox](https://github.com/ArchiveBox/ArchiveBox), etc.)

<br/>

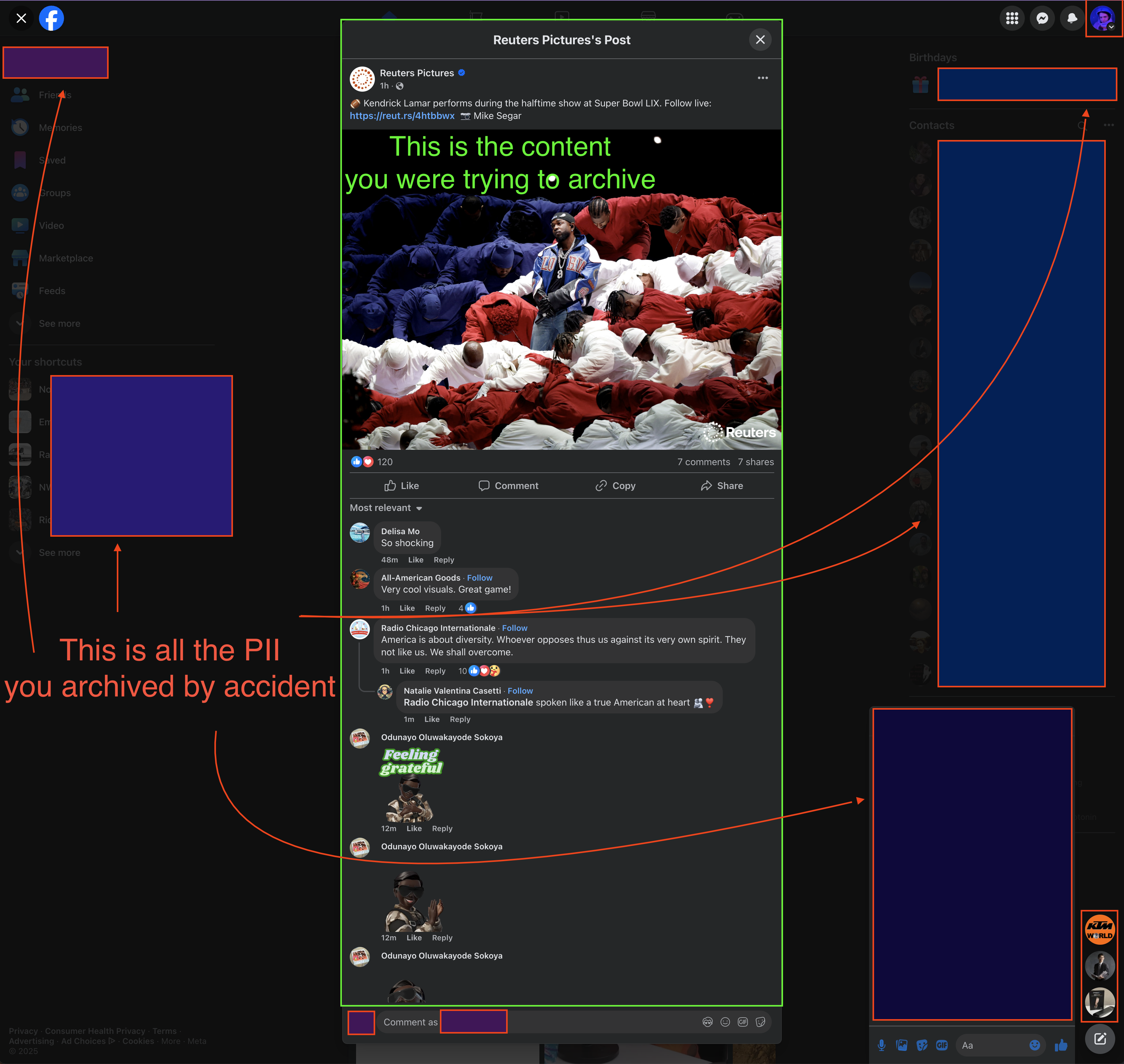

**If you use 🍪 login credentials to save sites, whose do you use?** Not your own!

> If you archive websites while logged into your normal daily-driver accounts, your cookie headers (essentially your password) & other [PII](https://en.wikipedia.org/wiki/Personal_data) / account details in the HTML and API traffic will get sealed into the archive for eternity!

**Having every archive you save be tainted with PII severely limits the utility of archiving.**

Now you can't *share* these archives with anyone who you wouldn't share your login with.

<br/>

So you have to make a choice. Do you:

<br/>

### 🤖 Create a separate fake account on every website for the archiver to use?

<img src="https://docs.monadical.com/uploads/95bb457f-bf03-4877-836e-2c46eaf583ed.png" style="max-width: 240px; float: right; margin: 5px; border-radius: 4px"/>

You can create fake "sockpuppet" accounts on every site you want to capture, and invite those accounts to any private spaces or groups you want to archive so they have read access. This isn't perfect because even if leaked credentials only belong to a bot, it still gives an attacker the ability to lock you out and take over the bot.

- **pros:** limits exposure in case headers are leaked, can restrict user access just for archiving

- **cons:** *very* tedious to maintain a fleet of fake accounts, and bot-only accounts are easily detected

***OR***

### 👤 Archive with your personal accounts and try to mitigate the security risk?

This is risky-by-default and requires constant active intervention to avoid disaster. A failure of any one of the approaches below can lead to immediate life-altering consequences.

Imagine you save an interesting Google search result and share the [WARC](https://en.wikipedia.org/wiki/WARC_(file_format)) with a close friend. They think it's funny and innocently post it on Reddit for laughs, not realizing that they just made your Google/Gmail login credentials public to the whole world. From there, someone can lock you out of your entire digital life in 30 seconds by logging in as you and requesting password reset emails from other sites.

<br/>

<img src="https://docs.monadical.com/uploads/29d37bdf-43e1-412b-b345-91a09cbe2d7c.png" style="max-width: 100%"/>

<br/><br/>

<img src="https://docs.monadical.com/uploads/cf890d3d-de8a-43b9-b061-cad7cd87efe9.png" style="max-width: 290px; float: right; margin-top: 15px"/><br/>

Cookie theft used to be a much more popular form of attack (remember [Firesheep](https://en.wikipedia.org/wiki/Firesheep)?), but these days people mostly worry about malicious browser extensions selling off their data, not about "static" archive files. If we all start archiving more of our social media / private content, that will change.

<br/><br/>

<img src="https://docs.monadical.com/uploads/3fafb489-d24f-44a1-956d-66e1449a0676.png"/>

<br>

---

### Archiving Approaches

<br/>

- 🐚 **Paranoid hermit mode: Never share any archive data with anyone.**

  Owning a big pile of tainted data is still a huge liability; you have to guard it like a password!

  - **Cons:** archives are now confined to the individuals who save them, which turns deciding whether to share an archive into a zero-sum situation. Making a capture public might benefit society, but it will likely harm the archivist who created it by leaking their login credentials. Even saved pages that look harmless, with *no visible PII*, can contain a saved cookie or API key that allows complete takeover of the archivist's accounts.

- 🫨 **Anxiety helicopter mode: Try to log out of sites after every crawl** & detect/scrub PII.

  This is only feasible at scale with help from automated tooling (AI QA + browser agents).

  - **Cons:** imperfect. Logout might fail. You have to trust sites to actually invalidate old cookies on logout. It doesn't work for JWTs or other long-lived API tokens or secrets embedded in the capture. It also doesn't address PII in the HTML like your email, name, notifications, etc.

- 📅 **Zen patience mode: Archives are secret by default and time-unlock after 10 years.**

  After that much time, any secret API keys or cookies should have expired, making captures safe*r* to share with the public. *(beware: not all PII expires!)*

  - **Cons:** destroys the short-term value of archiving (making immediately shareable backup copies of sites); research, evidence collection, and censorship avoidance can't be done without exposing the archivist. It also hinders realtime integration with other apps that could make your archive dataset useful day-to-day.

  - **Pros:** fairly safe, unless you set up auto-archiving with no URL filtering. It's very easy to accidentally capture a [plaintextoffender](https://plaintextoffenders.com/) or a sensitive Google Doc and lose track of it in a sea of everything else you're saving.

- ⚖️ **Risk-Tolerant Mode: Allow sharing screenshots & plain text from logged-in pages.**

  Currently the best practical solution. It balances risk vs. reward for each output type and only allows sharing outputs where any included PII is plainly visible, leaving it up to the human to catch.

  - Screenshot: ✅ (only reveals what is plainly visible, no hidden PII)

  - Screen recording: ✅ (only reveals what is plainly visible, no hidden PII)

  - Plain text: ✅ (only reveals what is plainly visible, no hidden PII)

  - Static HTML (no JS): ❌ (potentially risky, even static HTML can contain hidden PII)

  - HTML with JS: ❌ (contains JWT tokens / cookies / API keys / other PII)

  - WARC: ❌ (contains all JWT tokens / cookies / API keys / other PII)

  - tcpdump/HAR: ❌ (contains all JWT tokens / cookies / API keys / other PII)
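
The Risk-Tolerant Mode rules boil down to a small allow-list. Here's a minimal Python sketch of that policy. The output-type names are hypothetical and are not the real names of any archiving tool's extractors. The function fails closed: any output type it doesn't recognize is treated as unshareable whenever the capture was made with cookies.

```python
from enum import Enum


class Share(Enum):
    """Whether an output captured while logged in may be shared."""
    OK = "ok"        # only reveals what a human can see and review
    BLOCKED = "no"   # may hide cookies, tokens, API keys, or other PII


# Hypothetical output-type names mirroring the Risk-Tolerant Mode list.
LOGGED_IN_SHARE_POLICY = {
    "screenshot": Share.OK,
    "screenrecording": Share.OK,
    "plaintext": Share.OK,
    "html_static": Share.BLOCKED,
    "html_with_js": Share.BLOCKED,
    "warc": Share.BLOCKED,
    "pcap_or_har": Share.BLOCKED,
}


def shareable_outputs(output_types, logged_in):
    """Return the output types that may be shared for one capture.

    Captures made with no cookies are not restricted by this policy.
    Unknown output types count as BLOCKED (fail closed).
    """
    if not logged_in:
        return list(output_types)
    return [
        t for t in output_types
        if LOGGED_IN_SHARE_POLICY.get(t, Share.BLOCKED) is Share.OK
    ]


print(shareable_outputs(["screenshot", "warc", "plaintext"], logged_in=True))
# ['screenshot', 'plaintext']
```

Failing closed matters here. A new output format added later (say, a PDF export) stays private until someone decides it only shows what's visible.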

---

<br/>

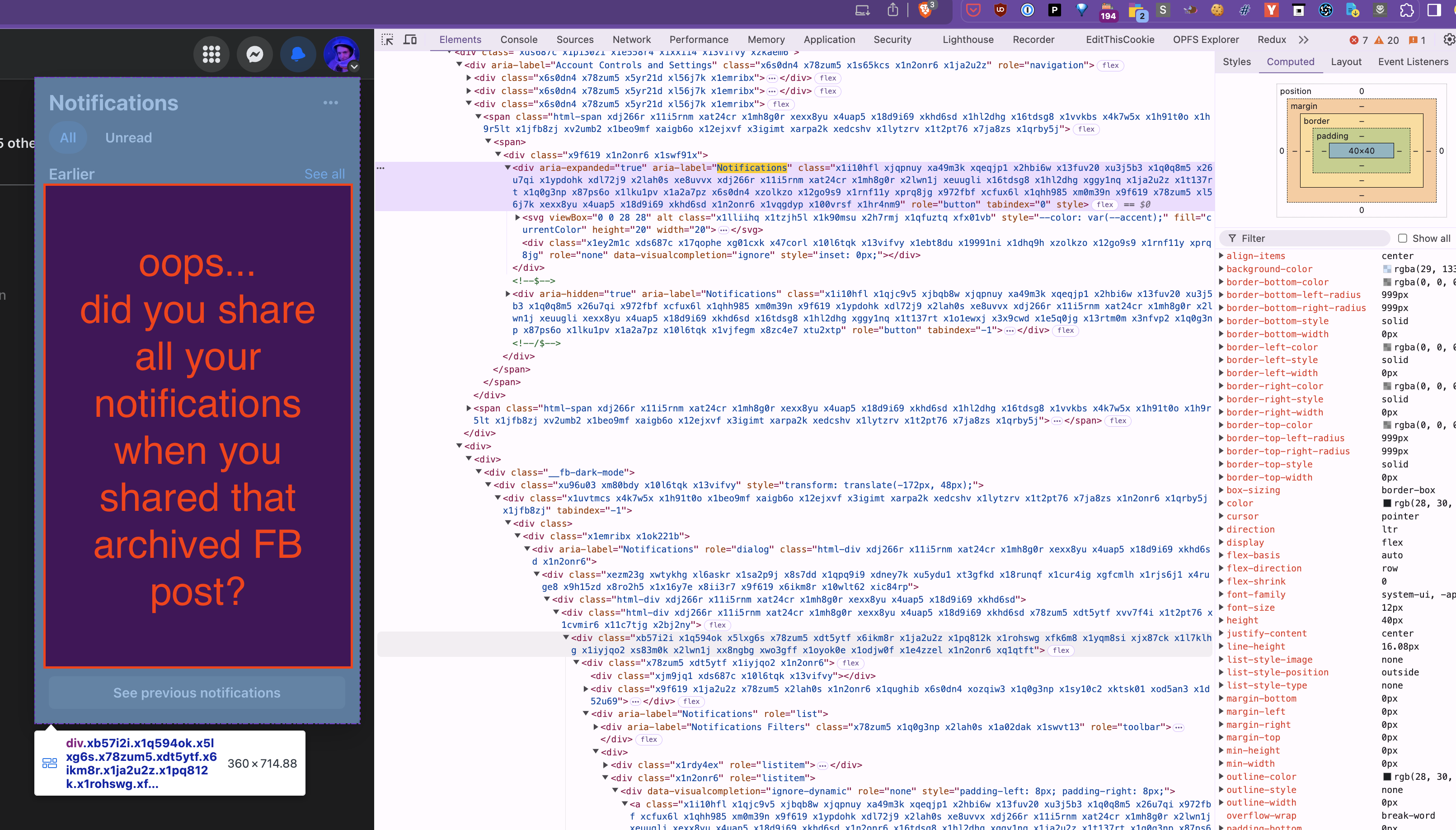

> **Even screenshots can contain [PII](https://en.wikipedia.org/wiki/Personal_data?useskin=vector)**, but at least it's easily spotted.

As a practical solution, we can allow people to share screenshots of logged-in pages, because presumably the user can see any PII themselves in the screenshot and decide not to share it. Unless the page does some sort of steganography, we can promise there is no *invisible* PII. With screenshots you just get what you see, and what you see might include PII.

On social media sites that can still mean revealing the logged-in user's username, full name, profile picture, and recent notifications in the upper right-hand corner. On messaging apps like Discord, all your server and channel names might be exposed because they're in the sidebar.

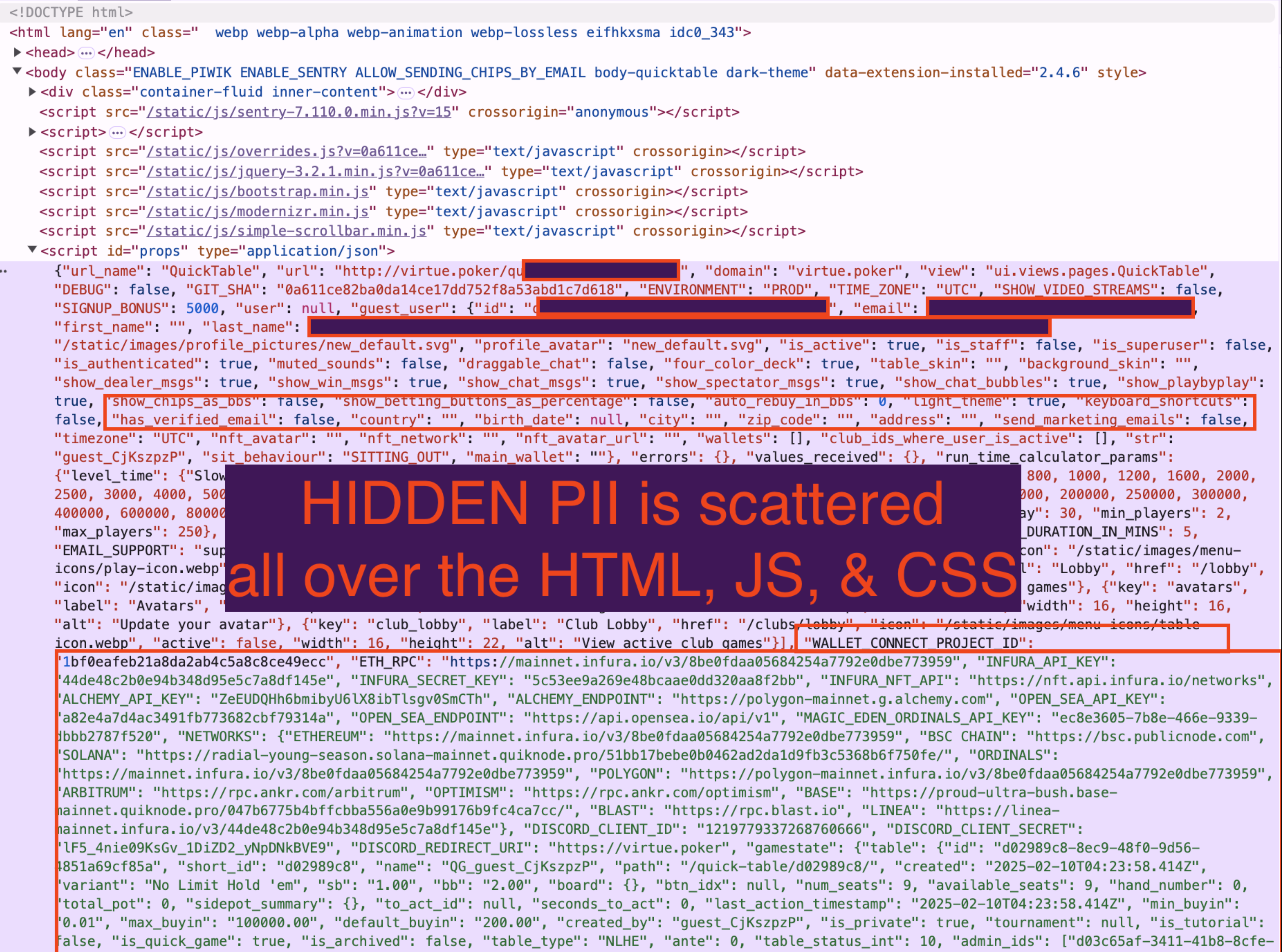

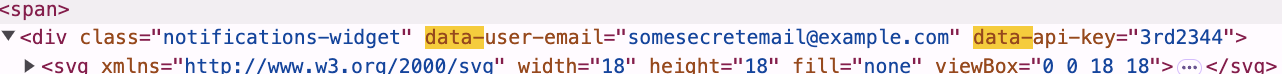

> **Recommended principle: prevent users from inadvertently sharing *hidden* PII.**

No matter how hard you try, any HTML capture (even one stripped of all JS) can contain hidden PII (e.g. user UUIDs, API keys, names, emails, etc.) embedded in `data-` attributes, server resource URLs, `data:base64` URLs, CSS, etc. On docs apps like Google Docs, archiving one doc might inadvertently reveal the secret URLs of 5 other docs you own, because they're rendered in the `File > Open Recent...` menu bar HTML. It's almost never safe to share HTML unless it's been sanitized *by hand* (or by AI?).
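
To see how easily hidden PII gets past a "no JS" filter, here's a rough sketch of a scanner. It's a hypothetical helper built on Python's standard-library `html.parser`, and it flags every `data-` attribute, every inline `data:` URL, and any attribute value shaped like a JWT or email address. The regexes are crude heuristics made up for illustration. A hit proves the HTML is unsafe, but an empty result proves nothing.

```python
import re
from html.parser import HTMLParser

# Crude, illustrative patterns; real secrets come in far more shapes.
JWT_RE = re.compile(r"eyJ[\w-]+\.[\w-]+\.[\w-]+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class HiddenValueScanner(HTMLParser):
    """Flag attribute values that a viewer never sees rendered on screen."""

    def __init__(self):
        super().__init__()
        self.findings = []  # (tag, attribute, reason) tuples

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:  # boolean attribute like `hidden`
                continue
            if name.startswith("data-"):
                self.findings.append((tag, name, "data-* attribute"))
            if value.startswith("data:"):
                self.findings.append((tag, name, "inline data: URL"))
            if JWT_RE.search(value):
                self.findings.append((tag, name, "JWT-like token"))
            if EMAIL_RE.search(value):
                self.findings.append((tag, name, "email address"))


# No <script> anywhere, yet several kinds of hidden values are present.
html = (
    '<div data-user-id="8f3a" data-email="me@example.com">Hello</div>'
    '<img src="data:image/png;base64,iVBORw0KGgo=">'
    '<a href="/doc?session=eyJhbGciOi.eyJzdWIiOi.c2lnbmF0dXJl">Recent</a>'
)
scanner = HiddenValueScanner()
scanner.feed(html)
for finding in scanner.findings:
    print(finding)
```

The only visible text on that "page" is `Hello` and `Recent`. Everything the scanner reports would be invisible in a screenshot.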

<br/>

> **HTML/CSS/JS are very hard to sanitize after the fact** without breaking them.

Most modern websites are not static pages anymore. Instead, they are a gaggle of Turing-complete VMs in a trenchcoat redrawing the image in front of your face every 16ms. JS, WASM, WebGPU, WebRTC, hover states, service workers, etc. all contribute to the difficulty of making captures meaningfully replayable.

<br/>

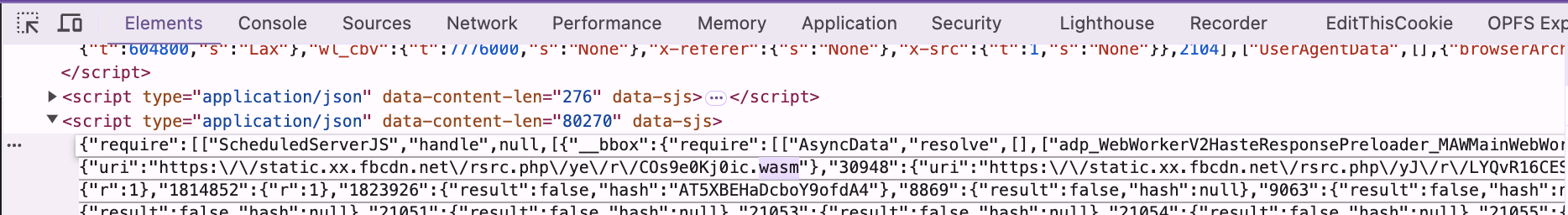

> **Websites are Turing-complete.**

These little Turing-complete VMs get very mad at you when you mess with any of their inputs and outputs, because they do all sorts of subresource integrity checking and signature verification (e.g. MD5s and [HMAC](https://en.wikipedia.org/wiki/HMAC)s on JSON requests, [CSP hashes](https://content-security-policy.com/hash/), etc.). It's hard enough as-is getting sites to run with byte-for-byte perfectly replayed IO; every extra sanitization perturbation causes 3 new things to break elsewhere. If you try to tamper with responses to sanitize PII, you end up having to tamper with the code that checks the response signatures too.
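
Here's a toy Python illustration of why sanitizing breaks signed responses, using the standard `hmac` module. The key, the payload and the `client_accepts()` check are all hypothetical. The sketch assumes the page's own code can verify the signature, for example with a key or hash shipped in its JS. That verifying code is exactly what you would also have to patch to make a redacted response load.

```python
import hashlib
import hmac
import json

SITE_KEY = b"hypothetical-signing-key"  # stand-in for whatever the site uses


def sign(body: bytes) -> str:
    return hmac.new(SITE_KEY, body, hashlib.sha256).hexdigest()


def client_accepts(body: bytes, signature: str) -> bool:
    # Stand-in for the page's own integrity check before it trusts a response.
    return hmac.compare_digest(sign(body), signature)


original = json.dumps({"user": "alice@example.com", "feed": ["post 1", "post 2"]}).encode()
signature = sign(original)  # the capture stores the body and its signature

# Byte-for-byte replay: the check passes.
replayed_ok = client_accepts(original, signature)

# Redact the email in place: the recorded signature no longer matches,
# so the page rejects the response unless the checking code is patched too.
redacted = original.replace(b"alice@example.com", b"[REDACTED]")
redacted_ok = client_accepts(redacted, signature)

print(replayed_ok, redacted_ok)  # True False
```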

> But we need some way to disentangle authentication tokens from Turing-complete JS code, arbitrary HTML, API responses, WebSocket messages, protobufs, gzips, etc. and more... without breaking any of those things in the process.

The only reason saving modern JS pages is possible is because archived frontend code can't tell the difference between a real backend and the archive server emulating one. Archive [replayers](https://webrecorder.net/replaywebpage/) only work because you can play back the original captured IO byte-for-byte without having to understand any of the content.
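
Here's a minimal sketch of that idea. It assumes a simplified in-memory store keyed on method + URL, and the class and field names are made up. The replay side just hands back recorded bytes and never decodes them. Real replayers handle much more than this, such as URL canonicalization and fuzzy matching of query strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapturedResponse:
    status: int
    headers: tuple  # (name, value) pairs exactly as recorded
    body: bytes     # opaque: never parsed, decoded, or rewritten


class ReplayStore:
    """Toy replay lookup: answers a request with the bytes recorded for it."""

    def __init__(self) -> None:
        self._records = {}

    def record(self, method: str, url: str, response: CapturedResponse) -> None:
        self._records[(method.upper(), url)] = response

    def replay(self, method: str, url: str) -> CapturedResponse:
        # No understanding of the body is needed, so gzip, protobuf, or signed
        # JSON all replay the same way: byte-for-byte.
        found = self._records.get((method.upper(), url))
        if found is None:
            return CapturedResponse(404, (), b"not in archive")
        return found


store = ReplayStore()
blob = bytes([0x1F, 0x8B, 0x08, 0x00])  # first bytes of a gzip stream; never inspected
store.record("GET", "https://example.com/api/feed",
             CapturedResponse(200, (("Content-Encoding", "gzip"),), blob))

hit = store.replay("get", "https://example.com/api/feed")
miss = store.replay("GET", "https://example.com/api/other")
print(hit.body == blob, miss.status)  # True 404
```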

> "If you wish to make an apple pie from scratch, you must first invent the universe."

When you decide you need to start tampering with captures to make them safe, you're suddenly faced with the impossible task of *parsing every single byte that any website could send you that might contain a secret, in any format*. That's beyond the scope of even current AIs. Base64-encoded [HMAC](https://en.wikipedia.org/wiki/HMAC)'d responses are a *regular* occurrence on the web, not an edge case. Updating a line within one of those in place without breaking it is still beyond the reach of current AI and cryptography tech (as of 2025/01).

The moment you start poking around inside the captured traffic trying to modify stuff, the whole house of cards comes tumbling down because many of the big-tech media platforms perform signature/hash checking of their AJAX and content requests.

The better the archiving tool is at emulating every feature of the modern web, the more APIs and formats you'll have to delicately sanitize without breaking them.
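
Here's a small Python sketch of the layering problem, with a made-up token and envelope shape. The secret is JSON inside gzip inside base64 inside more JSON, so a plain byte search over the capture misses it entirely. This only covers three well-known encodings. A scrubber would also have to re-encode every layer after editing it, and re-sign anything that was signed.

```python
import base64
import gzip
import json

TOKEN = "sess_4f9c2e"  # hypothetical session secret

# Build a response shaped like many real ones: JSON -> gzip -> base64,
# wrapped in an outer JSON envelope.
inner = json.dumps({"viewer": {"session": TOKEN}}).encode()
wire = json.dumps({"payload": base64.b64encode(gzip.compress(inner)).decode()}).encode()

# A naive byte search over the captured bytes finds nothing.
naive_hit = TOKEN.encode() in wire

# Only peeling every layer, in the right order, reveals the secret.
envelope = json.loads(wire)
decoded = json.loads(gzip.decompress(base64.b64decode(envelope["payload"])))
deep_hit = decoded["viewer"]["session"] == TOKEN

print(naive_hit, deep_hit)  # False True
```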

---

### One Possible Approach

- Tag all captured content with one of three permissions: 🟢 🟡 🔴

  - 🟢: Safe to share with untrusted public viewers, no PII / sensitive cookies within

    Full HTML, JS, and request/response data can be stored unencrypted & shared freely.

  - 🟡: Only safe to share HTML with certain users; the HTML is unsanitized & contains PII 🍪!

    The ACL can be managed by the Django permissions system, SAML, or another API.

    Full HTML, JS, and request/response data should be stored encrypted at rest, with the key split using [Shamir's secret sharing](https://en.wikipedia.org/wiki/Shamir%27s_secret_sharing).

  - 🔴: HTML is not safe to share with anyone beyond the user who created it

    Only allow screenshots 🖥️ and plaintext ✏️ versions to be shared.

    Full HTML, JS, and request/response data should be stored encrypted at rest with a single key.

- Permissions can be applied to any record, and apply recursively to children unless overridden:

  - browser profile

  - crawl

  - page snapshot

  - individual result subfiles within a snapshot

- The UI allows you to change the permissions label on a record

  - Show big warnings whenever you bump a record to a more permissive label

  - List exactly which accounts used for the capture are burned by sharing it with a bigger audience

  - Do not allow making public hotlinks directly to any 🔴 records

  - If you generate a unique share link that is password protected or expires in <30 days, then you can link to any type of record

- How should you apply these permissions?

  - If you are archiving using your personal accounts, mark your profile 🔴 so all records created using it stay locked down and accessible only by you.

    This is only suitable for individuals archiving for private use, not for orgs or individuals that want to share their captures with others.

  - If you are using a dedicated archiving browser profile with bot burner accounts, use 🟡.

    This works best for organizations and businesses; multiple burner accounts can be used to limit access to content very granularly. Access is restricted to logged-in abx users or to unique one-time links with an expiration/password.

  - The default profile is always 🟢 and represents a public anonymous visitor with no auth.

    It just visits pages as a non-logged-in user would see them, so it can be used to test out the archiving process without setting up logins & profiles.

    It allows you to publicly rehost and hotlink to some capture content as long as you respond to DMCA notices and/or qualify for fair use.

    These captures can be uploaded to Archive.org and other public repositories.
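
The labeling rules above can be sketched in a few dozen lines of Python. This is a hypothetical model, not ArchiveBox's actual schema. `Record`, `Label`, `ShareLink` and the fail-closed default for unlabeled roots are all assumptions. So is the rule that only 🟢 records get public hotlinks, which follows from 🟡 access going through logins or share links. The sketch shows three things:

- labels inheriting down from profile to crawl to snapshot to file,
- the warning check when a label is loosened,
- the hotlink vs. share-link rules.

```python
from dataclasses import dataclass
from datetime import timedelta
from enum import IntEnum
from typing import Optional


class Label(IntEnum):
    """Permission labels; a higher value means a smaller allowed audience."""
    GREEN = 0   # 🟢 anonymous/public, safe to share freely
    YELLOW = 1  # 🟡 shareable only with specific users
    RED = 2     # 🔴 full captures visible to the creator only


@dataclass
class Record:
    """Any labeled object: browser profile, crawl, snapshot, or result file."""
    name: str
    parent: Optional["Record"] = None
    label: Optional[Label] = None  # None means "inherit from parent"

    def effective_label(self) -> Label:
        node = self
        while node is not None:
            if node.label is not None:
                return node.label
            node = node.parent
        return Label.RED  # fail closed if nothing in the chain is labeled


@dataclass
class ShareLink:
    password_protected: bool = False
    expires_in: Optional[timedelta] = None


def needs_warning(old: Label, new: Label) -> bool:
    """Loosening a label (e.g. 🔴 to 🟡) should trigger a big warning."""
    return new < old


def can_share(record: Record, link: Optional[ShareLink] = None) -> bool:
    if link is None:
        # Plain public hotlink: only 🟢 qualifies (🟡 goes through logins or
        # share links, and 🔴 must never be hotlinked).
        return record.effective_label() is Label.GREEN
    # Unique share links may point at any record if protected or short-lived.
    if link.password_protected:
        return True
    return link.expires_in is not None and link.expires_in < timedelta(days=30)


# Usage: a snapshot inherits 🔴 from the personal profile it was captured with.
profile = Record("personal-profile", label=Label.RED)
crawl = Record("crawl-1", parent=profile)
snapshot = Record("snapshot-1", parent=crawl)

print(snapshot.effective_label().name)                               # RED
print(can_share(snapshot))                                           # False
print(can_share(snapshot, ShareLink(expires_in=timedelta(days=7))))  # True
```

Using an ordered `IntEnum` keeps the "more permissive" comparison to a single `<`. That makes the warning check hard to get backwards.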

---

### Brainstorming Ideas for 2030

- In the future, we could encrypt individual records with different keys linked to the cookies used to perform the archiving. This would tie the permissions together so that only users with the decryption key for the cookies can access the decryption key for the archived content.

  - Then, to voluntarily "burn" some credentials and make their content public, all you have to do is publish both keys on a message board.

  - If capture access is coupled to profile access, it will be much harder to accidentally share a capture without being forced to explicitly share the entire profile.

    - It prevents overconfident mistakes, like assuming a capture is sanitized enough not to burn the account that was used.

    - But it harms usability in cases where security doesn't matter that much, but logins are practically needed to access the content without getting blocked (youtube/soundcloud/tiktok/fb/insta/x/etc).

- "Mining" for a P2P archiving network could be doing proof-of-work to unlock time-locked archives, proof-of-work to do crawling, or proof-of-existence to verify that archived snapshots still exist.

- You could use FHE and some sort of zk-proof to compute the AND intersection of a group of people's archives of a single site without revealing everyone's individual inputs.

  - This would eliminate the user-specific content and only show the intersection of what everyone sees.

  - PII (or anything that varies per user) would be obscured with "fog of war" style noise in the screenshot.

  - Anything that *multiple* users can see on a page can be marked shareable once they do a zk-proof to find the intersection of their individual results, without ever sharing the full cleartext.
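
For intuition about what that intersection would output, here's a deliberately *non-private* Python baseline. It sees everyone's plaintext, which is exactly what the FHE/zk-proof (or private set intersection) version would avoid. Comparing captures line by line is a simplification. The `min_viewers` threshold (k-of-n instead of a strict AND) and the `░░░░` fog placeholder are illustrative assumptions.

```python
from collections import Counter

FOG = "░░░░"  # illustrative placeholder for hidden, per-user content


def consensus_view(captures, min_viewers=None):
    """Return the first capture with lines seen by too few users fogged out.

    captures: one list of text lines per user, all from the same page.
    min_viewers: how many users must have seen a line for it to be kept;
    defaults to everyone (a strict AND intersection).
    """
    if min_viewers is None:
        min_viewers = len(captures)
    seen_by = Counter()
    for lines in captures:
        seen_by.update(set(lines))  # count each distinct line once per user
    return [line if seen_by[line] >= min_viewers else FOG for line in captures[0]]


alice = ["Trending topics", "Signed in as alice", "Post: cats are great", "3 new messages"]
bob = ["Trending topics", "Signed in as bob", "Post: cats are great", "1 new message"]

view = consensus_view([alice, bob])
print(view)  # ['Trending topics', '░░░░', 'Post: cats are great', '░░░░']
```

The private versions linked below aim to produce the same kind of output without any participant handing over their raw capture.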

<br/>

---


<br/>

#### Further Reading

- ⭐ [Private Set Intersection for Web Content Anonymization](https://docs.monadical.com/s/fXBCCklDJ) + https://github.com/pirate/html-private-set-intersection

- [Digital Public Infrastructure for Community Privacy, Agency, and Consent](https://docs.google.com/document/d/1nEw5UJfBCxp_XVNuF8jsvokAIm8sLak35YxmSyLKghM/edit?tab=t.0)

- [Oblivious transfer (Wikipedia)](https://en.wikipedia.org/wiki/Oblivious_transfer)

- [Commitment scheme (Wikipedia)](https://en.wikipedia.org/wiki/Commitment_scheme)

- ⭐ [TLSNotary docs](https://docs.tlsnotary.org/)

- [Emergent Research](https://www.emergentresearch.net/)

- [prove.email](https://prove.email/) / [zk.email](https://zk.email/)

- https://par.nsf.gov/servlets/purl/10109463

- [Does SSL/TLS provide non-repudiation service? (Security Stack Exchange)](https://security.stackexchange.com/questions/103645/does-ssl-tls-provide-non-repudiation-service)

<br/>

<br/>

---