<center>

# How to Make LLMs Speak Your Language

<big>

**A Review of the Top Tools for Generating Structured Output**

</big>

*This is an applied research report by Michał Flak and JDC for Monadical Labs, where we write about emerging technologies. Originally published on 2023-12-20 on the [Monadical blog](https://monadical.com/blog.html).*

</center>

## Introduction

Businesses today rely on seamless and intuitive communication to thrive, and Large Language Models (LLMs) have emerged as powerful tools that promise to transform how we communicate. In our previous article, [“To Fine-Tune or Not Fine-Tune: Large Language Models for AI-Driven Business Transformation](https://monadical.com/posts/fine-tune-or-not-llm-ai-business-transformation.html),” we explored how LLMs can enhance various aspects of business operations. In this article, we will focus on one specific application of LLMs that holds incredible promise and is rewriting the rulebook for customer interactions, process optimization, and data handling: **generating text from an LLM that adheres to a predefined output format.**

When LLMs provide answers and advice, they often use words in a way that feels like a conversation. But to make LLMs work with other computer programs or systems, they need to follow certain guidelines.

For instance, in the domain of computational interaction, the communicative efficacy between LLMs and external systems hinges upon their adherence to a predefined output format.

Getting LLMs to adhere to a specific output format, like a JSON object or even code, presents a challenge, as autoregressive LLMs are often unpredictable and inherently [lack controllability](https://drive.google.com/file/d/1BU5bV3X5w65DwSMapKcsr0ZvrMRU_Nbi/view). How can we balance the creativity of language generation with the need for structured output, and which tools are available to help in this endeavour? In this article, we’ll review different approaches, from open-source packages to simple yet effective solutions. We will also show you how to implement a smart text interface using an LLM that can generate text in a specific output format.

But first, let’s examine how LLMs generate output in the first place.

## How do LLMs generate output?

To see how an LLM can follow a certain output structure, our focus will be on a causal transformer model, where the goal is to predict the (N + 1)th token based on a sequence of N tokens. This framework provides a basis to examine the strategies that steer LLMs toward producing structured output.

Let’s start with a simplified overview of the two key components within the transformer model:

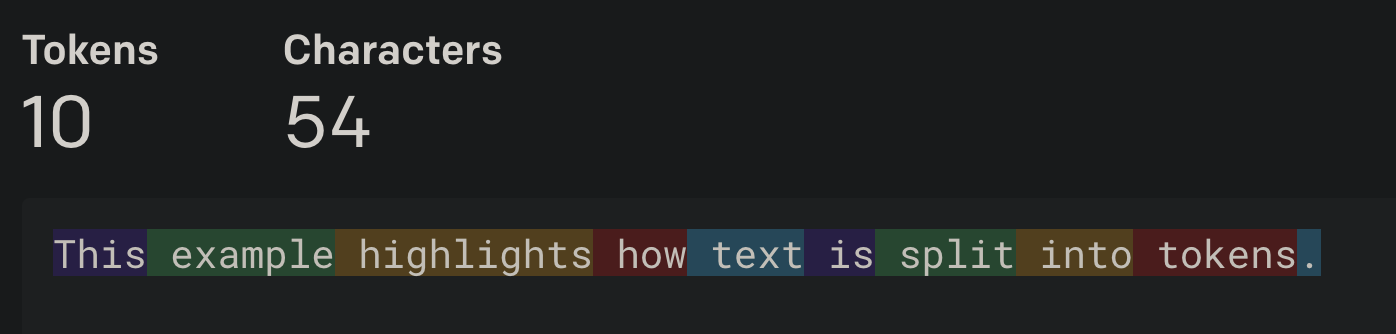

* **The Tokenizer:** A tokenizer is a component that takes in text and breaks it down into smaller units called tokens. These tokens could be words or even smaller parts, like subwords. The tokenizer’s job is to prepare the text so that a computer can work with it more efficiently. This component transforms words into a one-hot encoded token vector. Available tokens form the LLM’s vocabulary.

* **The Transformer:** The transformer is a sophisticated system that processes text by paying special attention to the relationships between different words or tokens. It does this through self-attention, which lets it focus on different parts of the text to understand how they connect. This helps the transformer capture the meaning and context of the language more effectively, making it a powerful tool for tasks like understanding, generating, and translating text. It’s like having an incredibly smart reader that understands each word and sees how they all fit together to create a bigger picture. The crux of the model, the transformer, converts N tokens into a predictive context for the (N + 1)th token, propelling the sequential nature of the model’s output.

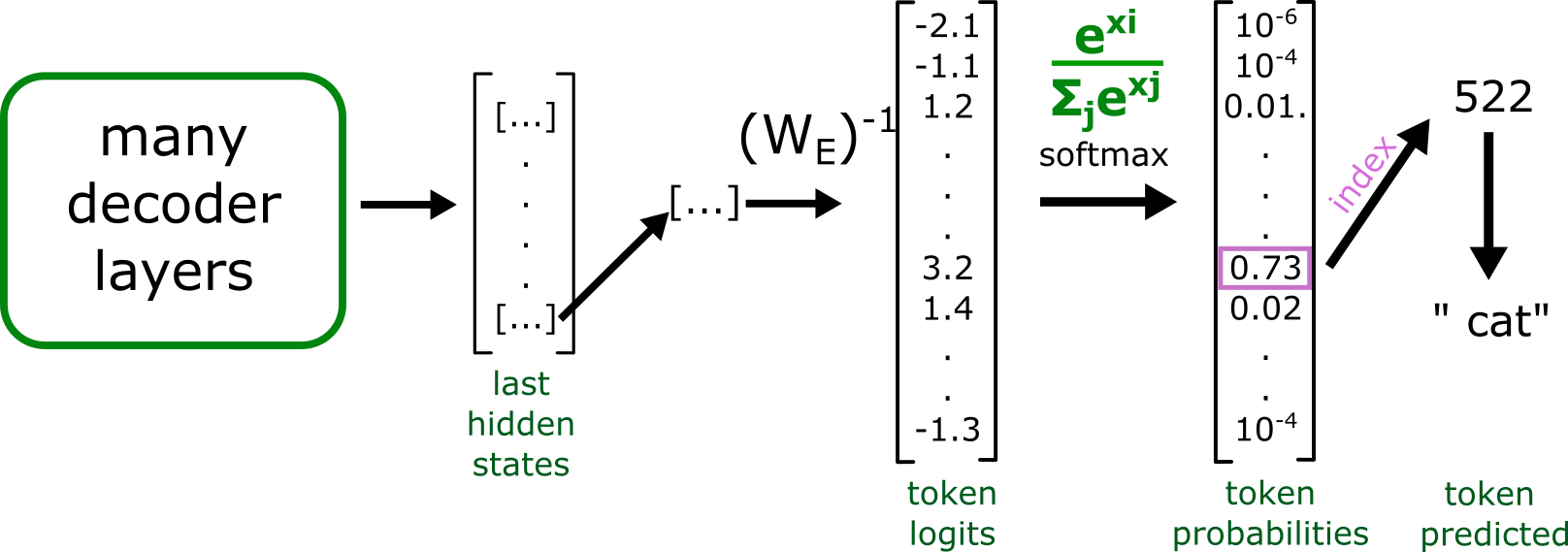

For this report, it’s sufficient to explain the very last layers of the transformers: the logits layer, softmax function, and the resulting token probabilities.

*Source: [LLM Basics: Embedding Spaces - Transformer Token Vectors Are Not Points in Space](https://www.lesswrong.com/posts/pHPmMGEMYefk9jLeh/llm-basics-embedding-spaces-transformer-token-vectors-are)*

Token logits form the raw output of the final hidden state vector multiplied by the unembedding matrix. From this operation, we get “logits” - unnormalized log probabilities for **each possible token in the vocabulary.**

This is then passed through softmax - a function whose results all sum up to 1, giving us a probability distribution over the tokens.

Subsequently, we can sample the (n+1)th token from this distribution. Sampling with temperature=0 means that the token with the highest probability assigned is always chosen, making the model deterministic. Sampling with temperature=1 means that we choose according to the probability distribution - we have many possible options with varying likelihoods of being selected.

## Why guide the model output?

Let’s consider building a sentiment classifying model, which works by processing a piece of text to determine the emotional undertone of the message. We’d also like to use this model reliably in our application; for example, we might want to build a trading bot, which acts on analyzing thousands of tweets containing a name or ticker (stock symbol) of the company, and then trade on the X (Twitter) user’s sentiment.

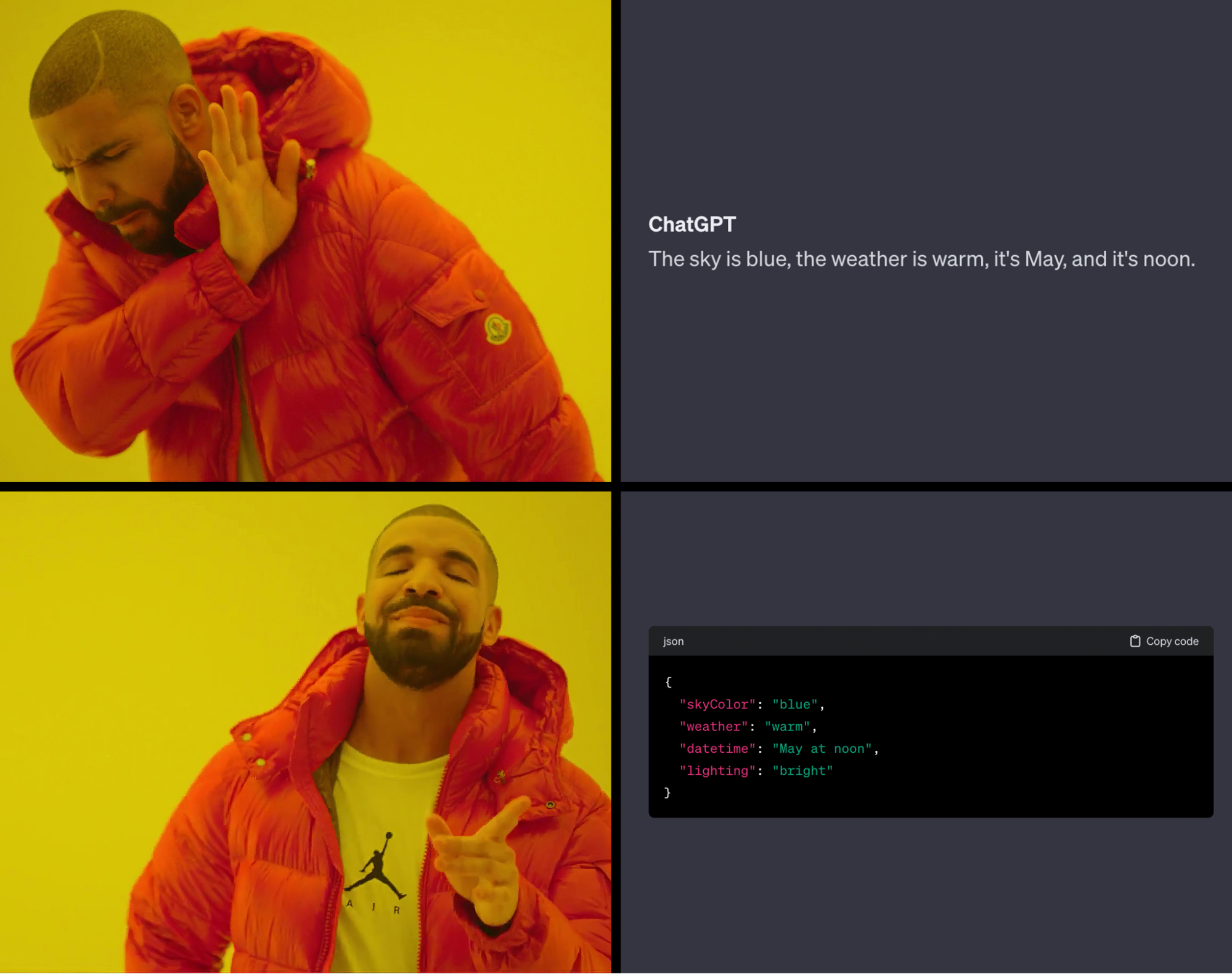

Our first hunch might be to ask ChatGPT for an answer.

>Is the emotional sentiment of the tweet:

“XYZ to the moon! 💸💰📈”

Positive or negative?

What we get:

>The emotional sentiment of the tweet “XYZ to the moon! 💸💰📈” is positive. The use of phrases like “to the moon” along with positive emojis like 💸💰📈 suggests excitement and positivity about the potential increase in the value or success of XYZ.

Cool! We get a well-reasoned answer to our question. But now we have another problem: how to use that output in our app? Parsing that seems near impossible. Ideally, we would like to get one of these two output strings:

* Positive

* Negative

We can try prompt engineering techniques. Instead, let’s be more specific and ask the model what we want:

>Is the emotional sentiment of the tweet:

“XYZ to the moon! 💸💰📈”

Positive or negative?

Answer only using one word, either “Positive” or “Negative”

We get:

>Positive

Great! Suppose then we want to use a smaller, open-source model. For the same prompt using `ggml-mpt-7b-instruct` we get:

>The user input is a positive statement about XYZ.

As we can see, **we cannot rely on prompt engineering alone.** No matter how many few-shot examples we use, we nevertheless run into the risk of failure to adhere. There’s always a risk of failure purely due to the probabilistic nature of LLMs.

## Logit masking

Having access to the logits layer, we can manipulate it to steer the model in the direction we want.

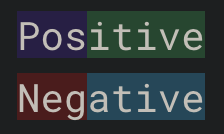

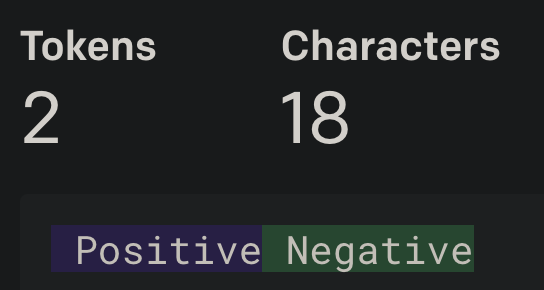

Let’s consider our example. We can look at how our allowed answers split into tokens:

The available token set contains these words as single tokens, but with a preceding space. Let’s take advantage of this quirk and simplify the example:

These correspond to tokens with IDs ``[33733, 36183]``.

Now, we can perform a trick. Let’s assign 0 to every row in the token logits vector, except for IDs ``[33733, 36183]``. **This means that only these two tokens are left as possible choices.** After going through `softmax`, only these two tokens are left with a non-zero probability - we are **guaranteed** to get one of them.

We are not limited to static token filtering - sequences of tokens can be masked depending on the completion generated so far - we can, for example, mask to match a regular expression (example: a decimal number), a context-free grammar (example: JSON), or whatever we want using custom programmatic rules. This way, we are certain to get structured output for downstream use in the application.

## Example: Natural language invocation of a CLI app

Consider a simple Python CLI program - an image format converter. It’s going to accept three arguments:

- Input image

- Output image

- Output format

If argument parsing is invalid, the program will interpret the first argument as a natural language query, and generate an invocation of itself to fulfill that query.

```python

import argparse

from lark import Lark

import sys

import os

def main():

# Create the parser

parser = argparse.ArgumentParser(description='Image format converter.')

# Add arguments to the parser

parser.add_argument('-i', '--input', help='Input image.', required=True)

parser.add_argument('-o', '--output', help='Output image. If not provided, the output will have the same name as the input image.')

parser.add_argument('-f', '--format', choices=['png', 'jpg', 'bmp'], help='Output format.', required=True)

try:

args = parser.parse_args()

print(args)

return

except:

pass

from transformers import AutoModelForCausalLM, AutoTokenizer

from parserllm import complete_cf

model = AutoModelForCausalLM.from_pretrained("databricks/dolly-v2-3b")

tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-3b")

# Should be possible to generate from ArgumentParser

grammar = f"""

start: "python " SCRIPT ARGS

SCRIPT: "{os.path.basename(__file__)} "

ARGS: INPUT_ARG " " FORMAT_ARG " " (OUTPUT_ARG)?

INPUT_ARG: ("-i " | "--input ") STRING

OUTPUT_ARG: ("-o " | "--output ") STRING "." ALLOWED_FORMATS

FORMAT_ARG: ("-f " | "--format ") ALLOWED_FORMATS

ALLOWED_FORMATS: "png" | "jpg" | "bmp"

STRING: /[a-zA-Z0-9_\.\/]+/

"""

larkparser = Lark(grammar=grammar, parser='lalr', regex=True)

prompt = f"""Given the following help message of a Python CLI program:

```

{parser.format_help()}

```

Invoke the program to satisfy user's request.

Request:

{sys.argv[1]}

Output:

"""

# Now generate the output using parserllm, restricting available logits to the ones

# allowed by our grammar

print(complete_cf(prompt, larkparser, "",

tokenizer,

model,

max_new_tokens=20,

debug=True))

if __name__ == "__main__":

main()

```

Taking a closer look, we can see that:

1. Arguments are defined using `argparse`.

2. On parse success, we return successfully. On failure, we go further.

3. Next, the grammar is defined. `parserllm` is used as the guided generation library for this simple example, and that uses the [Lark parser generator](https://github.com/lark-parser/lark) - so we define the grammar in Lark format. (Note: In principle, it’s possible to generate the grammar from the ArgumentParser).

4. The prompt for the LLM is constructed. The `Help` message is fed into it, to give the model information about its options.

5. The suggestion is generated using the grammar, `parserLLM` and the `dolly-v2-3b` model, and then printed.

And here’s an example output:

```

➜ rag-experiments git:(master) ✗ python argparse_demo.py "Convert the file cat.png to jpg and save to cats/mittens.jpg"

usage: argparse_demo.py [-h] -i INPUT [-o OUTPUT] -f {png,jpg,bmp}

argparse_demo.py: error: the following arguments are required: -i/--input, -f/--format

valid next token: [regex.Regex('python\\ ', flags=regex.V0)]

step=0 completion=python

step=1 completion=python

valid next token: [regex.Regex('argparse_demo\\.py\\ ', flags=regex.V0)]

step=0 completion=arg

step=1 completion=argparse

step=2 completion=argparse_

step=3 completion=argparse_demo

step=4 completion=argparse_demo.

step=5 completion=argparse_demo.py

step=6 completion=argparse_demo.py

valid next token: [regex.Regex('(?:\\-\\-input\\ |\\-i\\ )[a-zA-Z0-9_\\.\\/]+\\ (?:\\-\\-format\\ |\\-f\\ )(?:png|jpg|bmp)\\ (?:(?:\\-\\-output\\ |\\-o\\ )[a-zA-Z0-9_\\.\\/]+\\.(?:png|jpg|bmp))?', flags=regex.V0)]

step=0 completion=--

step=1 completion=--input

step=2 completion=--input cat

step=3 completion=--input cat.

step=4 completion=--input cat.png

step=5 completion=--input cat.png --

step=6 completion=--input cat.png --format

step=7 completion=--input cat.png --format j

step=8 completion=--input cat.png --format jpg

step=9 completion=--input cat.png --format jpg --

step=10 completion=--input cat.png --format jpg --output

step=11 completion=--input cat.png --format jpg --output cats

step=12 completion=--input cat.png --format jpg --output cats/

step=13 completion=--input cat.png --format jpg --output cats/mitt

step=14 completion=--input cat.png --format jpg --output cats/mittens

step=15 completion=--input cat.png --format jpg --output cats/mittens.

step=16 completion=--input cat.png --format jpg --output cats/mittens.jpg

python argparse_demo.py --input cat.png --format jpg --output cats/mittens.jpg

```

As seen here, a satisfactory result can be achieved even with a very small local model.

## Overview of tools for guiding LLM output

Below is a list of the tools we’ll explore, as well as a quick summary of their advantages and disadvantages. Keep reading for a deep dive into each one.

<table>

<thead>

<tr>

<th>Tool</th>

<th>Advantages</th>

<th>Disadvantages</th>

</tr>

</thead>

<tbody>

<tr>

<td><a href="https://platform.openai.com/docs/guides/function-calling">OpenAI function calling</a></td>

<td>

- Works with the most powerful LLMs from OpenAI<br>

- Black-box implementation

</td>

<td>

- Inflexible output format<br>

- No access to raw token probabilities<br>

- No guarantee of function call accuracy<br>

- Vendor lock-in

</td>

</tr>

<tr>

<td><a href=https://github.com/guidance-ai/guidance>Guidance</a></td>

<td>

- Compatible with Hugging Face and OpenAI<br>

- Valid output guaranteed<br>

- Rich prompt templating language<br>

- Regex matching<br>

- Generates only requested tokens<br>

- Removes tokenization artifacts<br>

- Popular and backed by a big corporation

</td>

<td>

- No token healing or pattern matching with OpenAI

</td>

</tr>

<tr>

<td><a href=https://github.com/microsoft/TypeChat>TypeChat</a></td>

<td>

- TypeScript library for non-Python users<br>

- Uses familiar TS type definitions

</td>

<td>

- Output not guaranteed; can fail with errors<br>

- Limited by prompt engineering and completion API<br>

- Only generates JSON objects<br>

- Only uses OpenAI API

</td>

</tr>

<tr>

<td><a href=https://github.com/1rgs/jsonformer>Jsonformer</a></td>

<td>

- Works with Hugging Face Transformers<br>

- Ensures valid and schema-compliant JSON output<br>

- More efficient than generating and parsing full JSON strings

</td>

<td>

- Limited in scope <br>

- Supports only a subset of JSON Schema

</td>

</tr>

<tr>

<td><a href=https://github.com/r2d4/rellm>ReLLM</a></td>

<td>

- Works with Hugging Face Transformers<br>

- Ensures valid output that matches regex pattern<br>

- Simple and easy-to-modify codebase

</td>

<td>

- Masks logits of non-matching tokens, affecting performance<br>

- Depends on use case and regex complexity<br>

- Only works with logit-biasing LLMs

</td>

</tr>

<tr>

<td><a href=https://github.com/r2d4/parserllm>parserLLM</a></td>

<td>

- Works with Hugging Face Transformers<br>

- Ensures valid output that matches CFG pattern<br>

- General purpose and perfect for DSLs and niche languages<br>

- Simple codebase and leverages popular Lark parser generator

</td>

<td>

- Parses whole sequence on each token, affecting speed and quality<br>

- Depends on use case and CFG complexity<br>

- Only works with logit-biasing LLMs

</td>

</tr>

<tr>

<td><a href=https://thiggle.com/>Thiggle</a></td>

<td>

- Easy to use - just call an API<br>

- Unique and reliable feature set among hosted options

</td>

<td>

- No info about LLMs used<br>

- Model might change without notice<br>

- Paid service

</td>

</tr>

<tr>

<td><a href=https://github.com/outlines-dev/outlines>Outlines</a></td>

<td>

- Works with Hugging Face Transformers<br>

- Ensures valid output<br>

- Extremely efficient

</td>

<td>

- Less featured than Guidance<br>

- No CFG support yet

</td>

</tr>

<tr>

<td><a href=https://lmql.ai/>LMQL</a></td>

<td>

- Works with Hugging Face Transformers and OpenAI<br>

- Ensures valid output with constraints<br>

- Rich feature set with Python scripting / templating

</td>

<td>

- No CFG support yet

</td>

</tr>

<tr>

<td><a href=https://github.com/jxnl/instructor>Instructor</a></td>

<td>

- Quick and easy way to get a Pydantic object from OpenAI

</td>

<td>

- OpenAI only<br>

- Function calling limitations apply<br>

- Relies on retrying on failure<br>

- Narrowly focused<br>

- Not very popular

</td>

</tr>

</tbody>

</table>

### 1. [OpenAI function calling](https://openai.com/blog/function-calling-and-other-api-updates)

In June 2023, OpenAI added a feature to their API, which allows you to define external APIs available to the model; this feature can also decide to call them to fetch useful information or perform an action.

For example, by providing a calculator API to the model, the model will then use it to perform a calculation (something LLMs are not particularly good at).

```python

class CalculateExpressionParams(pydantic.BaseModel):

expression: str

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo-0613",

messages=[

{"role": "user", "content": "What's (2 + 3) * 5?"}

],

functions=[

{

"name": "calculate_expression",

"description": "Perform a calculation",

"parameters": CalculateExpressionParams.schema()

}

],

function_call={"name": "calculate_expression"} # omit this line to make the model choose whether to call the function

)

output = json.loads(response.choices[0]["message"]["function_call"]["arguments"])

# {'expression': '(2 + 3) * 5'}

```

While OpenAI also offers [JSON mode](https://platform.openai.com/docs/guides/text-generation/json-mode), function calling remains the only way to precisely specify the output format from OpenAI LLMs. Due to limited API and lack of disclosing token probabilities, OpenAI’s capabilities lag behind self-hosted models in this respect.

There is no available information as to how the feature is implemented under the hood and to what extent the structure of the output is guaranteed - [OpenAI’s docs](https://platform.openai.com/docs/guides/gpt/chat-completions-api) only mention fine-tuning, and that by itself is insufficient to guarantee that:

>The latest models (`gpt-3.5-turbo-1106` and `gpt-4-1106-preview`) have been trained to both detect when a function should to be called (depending on the input) and to respond with JSON that adheres to the function signature more closely than previous models.

Based on this post, the model can also hallucinate, calling a non-existent function or messing up the arguments structure:

>On running this code, you might see ChatGPT sometimes generate function call for a function named “python” instead of “python_interpreter”

[…]

You should also notice that the model has messed up the arguments JSON as well. It gave the Python code directly as the value of the “arguments”, as opposed to giving it as a key-value pair of argument name and its value

Let’s look at the pros and cons of this tool:

**Pros:**

- Available with OpenAI’s LLMs, which are the most powerful ones as of the time of writing

**Cons:**

- Implementation is a black box

- Inflexible: you cannot completely freely define the output format

- OpenAI doesn’t provide access to the raw token probabilities on the last layer

- There is no guarantee that the functions will be called in the requested format; hallucinations have been reported

- Vendor lock-in

### 2. [Guidance](https://github.com/guidance-ai/guidance)

Guidance is a templating language and library from Microsoft. It recently received a major overhaul, moving away from the handlebars-like DSL syntax to pure Python.

Among the offered features is constrained generation with selects, regular expressions, and context-free grammars:

```python

Import guidance

from guidance import select, models, one_or_more, select, zero_or_more

llama2 = models.LlamaCpp(path)

# a simple select between two options

llama2 + f'Do you want a joke or a poem? A ' + select(['joke', 'poem'])

# regex constraint

lm = llama2 + 'Question: Luke has ten balls. He gives three to his brother.\n'

lm += 'How many balls does he have left?\n'

lm += 'Answer: ' + gen(regex='\d+')

# regex as stopping criterion

lm = llama2 + '19, 18,' + gen(max_tokens=50, stop_regex='[^\d]7[^\d]')

# context free grammars

# stateless=True indicates this function does not depend on LLM generations

@guidance(stateless=True)

def number(lm):

n = one_or_more(select(['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']))

# Allow for negative or positive numbers

return lm + select(['-' + n, n])

@guidance(stateless=True)

def operator(lm):

return lm + select(['+' , '*', '**', '/', '-'])

@guidance(stateless=True)

def expression(lm):

# Either

# 1. A number (terminal)

# 2. two expressions with an operator and optional whitespace

# 3. An expression with parentheses around it

return lm + select([

number(),

expression() + zero_or_more(' ') + operator() + zero_or_more(' ') + expression(),

'(' + expression() + ')'

])

grammar = expression()

lm = llama2 + 'Problem: Luke has a hundred and six balls. He then loses thirty six.\n'

lm += 'Equivalent arithmetic expression: ' + grammar + '\n' # output: 106 - 36

```

Guidance works with local models through LlamaCpp and Hugging Face Transformers libraries, as well as remote LLM API providers, such as Cohere, Vertex AI and OpenAI.

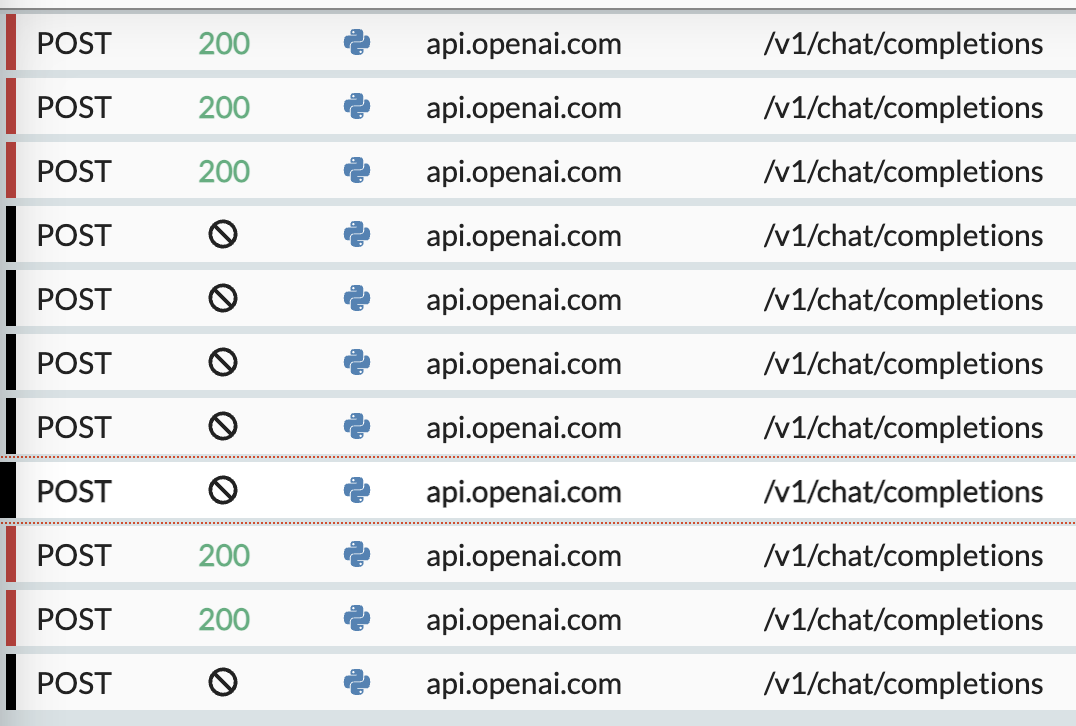

While the library tries to offer the same feature set for both local models and remote APIs, the latter have severe limitations. With a local model, the library can directly access token probabilities and influence sampling. This is not possible with APIs, and the library tries to compensate by retrying calls repeatedly until it matches what we want on the client side.

Here’s an example of constrained generation with OpenAI:

```python

gpt = models.OpenAI("gpt-3.5-turbo")

with system():

lm = gpt + "You are a cat expert."

with user():

lm += "What is an example of a small cat breed?"

with assistant():

lm += "A small cat breed is a " + select(['Maine coon', 'Siamese'])

```

Result:

```

Exception: We have exceeded the maximum number of calls! 10

```

And we can indeed see that a lot of requests were made when executing the above code:

Overall, Guidance has an impressively comprehensive feature set, which turns it into a very powerful tool for both constructing prompts and guiding LLMs. It’s also pleasant to use, employing pure Python to specify generation logic.

**Pros:**

* Compatible with Hugging Face Transformers, and to a lesser extent with OpenAI

* Guarantees valid output

* Prompt templating language with large feature set including loops and conditionals

* Regex matching

* Generates only requested tokens

* Removes tokenization artifacts (“token healing”)

* Popular, backed by a big corporation

**Cons:**

* Token healing, pattern matching [not available with OpenAI](https://github.com/guidance-ai/guidance/blob/main/guidance/llms/_openai.py#L618)

### 3. [TypeChat](https://github.com/microsoft/TypeChat)

TypeChat is a library from Microsoft, which generates valid JSON output matching a schema. By specifying a TypeScript type, the library will attempt to persuade the LLM to create a valid object of the type, which can then be used immediately in an application without any additional parsing.

There’s no guarantee of that ever happening though, due to a limited approach based solely on brute-force through prompt engineering and output validation.

Let’s examine a sentiment analysis example from the library’s [docs:](https://microsoft.github.io/TypeChat/blog/introducing-typechat/)

```typescript

// ./src/sentimentSchema.ts

// The following is a schema definition for determining the sentiment of a some user input.

export type SentimentResponse = {

/** The sentiment of the text (as typescript string literal union). */

sentiment: "negative" | "neutral" | "positive";

}

// ./src/main.ts

import * as fs from "fs";

import * as path from "path";

import dotenv from "dotenv";

import * as typechat from "typechat";

import { SentimentResponse } from "./sentimentSchema";

// Load environment variables.

dotenv.config({ path: path.join(__dirname, "../.env") });

// Create a language model based on the environment variables.

const model = typechat.createLanguageModel(process.env);

// Load up the contents of our "Response" schema.

const schema = fs.readFileSync(path.join(__dirname, "sentimentSchema.ts"), "utf8");

const translator = typechat.createJsonTranslator<SentimentResponse>(model, schema, "SentimentResponse");

// Process requests interactively.

typechat.processRequests("😀> ", /*inputFile*/ undefined, async (request) => {

const response = await translator.translate(request);

if (!response.success) {

console.log(response.message);

return;

}

console.log(`The sentiment is ${response.data.sentiment}`);

});

```

Only OpenAI API is supported by the official repository, although it’s possible to use it with a self-hosted model by modifying the code (see [llama.cpp](https://github.com/abichinger/typechat-llama-example/tree/main), for example).

However, the use of OpenAI’s own API limits the library significantly, since it relies solely on the unmodified [completion API](https://github.com/microsoft/TypeChat/blob/main/src/model.ts#L23C55-L23C55), shifting the responsibility to generate the allowed tokens on the model. Upon failure, the LLM is politely asked to generate once again, brute-forcing until a valid object is returned but providing zero guarantee this will ever happen.

The limitations are clear, simply by looking at the [codebase](https://github.com/microsoft/TypeChat/blob/main/src/program.ts) - the approach is based on prompt engineering and validating the returned string as JSON:

```typescript

function createRequestPrompt(request: string) {

return `You are a service that translates user requests into JSON objects of type "${validator.typeName}" according to the following TypeScript definitions:\n` +

`\`\`\`\n${validator.schema}\`\`\`\n` +

`The following is a user request:\n` +

`"""\n${request}\n"""\n` +

`The following is the user request translated into a JSON object with 2 spaces of indentation and no properties with the value undefined:\n`;

}

function createRepairPrompt(validationError: string) {

return `The JSON object is invalid for the following reason:\n` +

`"""\n${validationError}\n"""\n` +

`The following is a revised JSON object:\n`;

}

```

The library tries twice and gives up if valid output hasn’t been generated by the LLM:

```typescript

async function translate(request: string, promptPreamble?: string | PromptSection[]) {

const preamble: PromptSection[] = typeof promptPreamble === "string" ? [{ role: "user", content: promptPreamble }] : promptPreamble ?? [];

let prompt: PromptSection[] = [...preamble, { role: "user", content: typeChat.createRequestPrompt(request) }];

let attemptRepair = typeChat.attemptRepair;

while (true) {

const response = await model.complete(prompt);

if (!response.success) {

return response;

}

const responseText = response.data;

const startIndex = responseText.indexOf("{");

const endIndex = responseText.lastIndexOf("}");

if (!(startIndex >= 0 && endIndex > startIndex)) {

return error(`Response is not JSON:\n${responseText}`);

}

const jsonText = responseText.slice(startIndex, endIndex + 1);

const schemaValidation = validator.validate(jsonText);

const validation = schemaValidation.success ? typeChat.validateInstance(schemaValidation.data) : schemaValidation;

if (validation.success) {

return validation;

}

if (!attemptRepair) {

return error(`JSON validation failed: ${validation.message}\n${jsonText}`);

}

prompt.push({ role: "assistant", content: responseText });

prompt.push({ role: "user", content: typeChat.createRepairPrompt(validation.message) });

attemptRepair = false;

}

}

```

**Pros:**

* Features a TypeScript library - a rare non-Python entry

* Uses a language already known among developers, TS type definitions

**Cons:**

* No guarantee of a valid output ever returned; it can throw error and fail, which misses the point of this exercise

* Limited approach based on prompt engineering and completion API (this is a limitation of OpenAI and other proprietary models)

* Only generates JSON objects

* Only uses OpenAI API

### 4. [Jsonformer](https://github.com/1rgs/jsonformer)

Jsonformer is a library that generates structured JSON from LLMs and guarantees the generation of valid JSON adhering to a specific schema.

Jsonformer is a wrapper over Hugging Face Transformers, so it works with self-hosted models. It works by selecting only valid tokens (adhering to a schema) at each step of generation. In addition, it only generates requested value tokens, filling in the scaffolding into the prompt - so it should be efficient.

This library fills the same role as TypeChat, but the approach taken is superior. The code accesses the logits directly and masks them to only allow valid ones. There’s no way this can fail to generate valid JSON adhering to the requested schema.

Let’s examine at this example from [github](https://github.com/1rgs/jsonformer) more closely:

```python

from jsonformer import Jsonformer

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("databricks/dolly-v2-12b")

tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-12b")

json_schema = {

"type": "object",

"properties": {

"name": {"type": "string"},

"age": {"type": "number"},

"is_student": {"type": "boolean"},

"courses": {

"type": "array",

"items": {"type": "string"}

}

}

}

prompt = "Generate a person's information based on the following schema:"

jsonformer = Jsonformer(model, tokenizer, json_schema, prompt)

generated_data = jsonformer()

print(generated_data)

```

**Pros:**

* Compatible with Hugging Face Transformers

* Guarantees valid output by ensuring that the generated JSON is always syntactically correct and conforms to the specified schema

* Efficient: by generating only the content tokens and filling in the fixed tokens, Jsonformer is more efficient than generating a full JSON string and parsing it

**Cons:**

* This depends on use case: Jsonformer is limited in scope and currently supports a subset of JSON Schema

### 5. [ReLLM](https://github.com/r2d4/rellm)

ReLLM allows users to constrain the output of a language model by matching it with a regular expression. This is achieved by only allowing tokens that match the regex at each step of generation. ReLLMs is built on top of the Hugging Face Transformers library.

Let’s take a closer look at this example from [github](https://github.com/r2d4/rellm):

```python

import regex

from transformers import AutoModelForCausalLM, AutoTokenizer

from rellm import complete_re

model = AutoModelForCausalLM.from_pretrained("gpt2")

tokenizer = AutoTokenizer.from_pretrained("gpt2")

prompt = "ReLLM, the best way to get structured data out of LLMs, is an acronym for "

pattern = regex.compile(r'Re[a-z]+ L[a-z]+ L[a-z]+ M[a-z]+')

output = complete_re(tokenizer=tokenizer,

model=model,

prompt=prompt,

pattern=pattern,

do_sample=True,

max_new_tokens=80)

print(output)

# Realized Logistic Logistics Model

```

Currently, this is implemented in a naive way - by looping through the entire token vocabulary at each set, and checking for regex matches. This is not the optimal approach and can be computationally expensive (see [Outlines](https://docs.monadical.com/s/structured-output-from-LLMs#8-Outlines) for details).

```python

def filter_tokens(self, partial_completion: str, patterns: Union[regex.Pattern, List[regex.Pattern]]) -> Set[int]:

if isinstance(patterns, regex.Pattern):

patterns = [patterns]

with ThreadPoolExecutor():

valid_token_ids = set(

filter(

lambda token_id: self.is_valid_token(token_id, partial_completion, patterns),

self.decoded_tokens_cache.keys()

)

)

return valid_token_ids

```

**Pros:**

* Compatible with Hugging Face Transformers

* Guarantees valid output that matches the specified regex pattern

* Has a very simple codebase that is easy to understand and modify

**Cons:**

* Suboptimal performance, as it masks the logits of the tokens that do not match the regex pattern

* Dependency on the use case and the complexity of the regex pattern

* Limited scope, as it only works with LLMs that support logit biasing

### 6. [ParserLLM](https://github.com/r2d4/parserllm)

ParserLLM is a library that extends ReLLM. Both work with Hugging Face Transformers. It constrains the output of an LLM to match a [context-free grammar (CFG)](https://www.geeksforgeeks.org/what-is-context-free-grammar/) and uses [Lark parser generator](https://lark-parser.readthedocs.io/en/stable/), a tool that creates parsers from grammar rules, to determine the valid tokens for each position in the output sequence.

However, it’s inefficient in the same way as ReLLM in that it parses the whole sequence for each vocabulary token (see [code](https://github.com/r2d4/parserllm/blob/main/parserllm/parserllm.py#L47)).

Here’s an example from [github](https://github.com/r2d4/rellm):

```python

model = AutoModelForCausalLM.from_pretrained("databricks/dolly-v2-3b")

tokenizer = AutoTokenizer.from_pretrained("databricks/dolly-v2-3b")

json_grammar = r"""

?start: value

?value: object

| array

| string

| "true" -> true

| "false" -> false

| "null" -> null

array : "[" [value ("," value)*] "]"

object : "{" [pair ("," pair)*] "}"

pair : string ":" value

string : ESCAPED_STRING

%import common.ESCAPED_STRING

%import common.SIGNED_NUMBER

%import common.WS

%ignore WS

"""

### Create the JSON parser with Lark, using the LALR algorithm

json_parser = Lark(json_grammar, parser='lalr',

# Using the basic lexer isn't required, and isn't usually recommended.

# But, it's good enough for JSON, and it's slightly faster.

lexer='basic',

# Disabling propagate_positions and placeholders slightly improves speed

propagate_positions=False,

maybe_placeholders=False,

regex=True)

prompt = "Write the first three letters of the alphabet in valid JSON format\n"

print(complete_cf(prompt, json_parser, "",

tokenizer,

model,

max_new_tokens=15,

debug=True))

print("regular\n", ' '.join(tokenizer.batch_decode(model.generate(tokenizer.encode(prompt, return_tensors="pt"),

max_new_tokens=30,

pad_token_id=tokenizer.eos_token_id,

))))

```

**Pros:**

* Compatible with Hugging Face Transformers

* Guarantees valid output that matches the specified context-free grammar pattern

* General purpose, generates according to any CFG definition

* Perfect for DSLs and niche languages

* Very simple codebase

* Leverages Lark - an already-popular parser generator - to create parsers from grammar rules

**Cons:**

* Suboptimal performance, as it parses the whole sequence on each token of the vocabulary (which may reduce the speed and quality of the completions)

* Depends on the use case and the complexity of the CFG pattern, which may not be able to handle more advanced or ambiguous structures

* Limited in scope, as it only works with LLMs that support logit biasing

### 7. [Thiggle](https://thiggle.com/)

Thiggle is a paid service that leverages ReLLM and parserLLM under the hood to offer a platform for using LLMs in various ways. Some of the features that Thiggle provides are:

* Categorization API

* Structured Completion APIs

* Regex Completion API

* Context-Free Grammar Completion API

However, Thiggle does not disclose which LLMs are used for these features, and the models might change without notice, which risks affecting the performance.

**Pros:**

* Easy to use - just call an API

* Unique, reliable feature set among hosted options

**Cons:**

* No info about the LLMs used

* Model might change without notice, causing a change in performance

* Paid service

### 8. [Outlines](https://github.com/normal-computing/outlines)

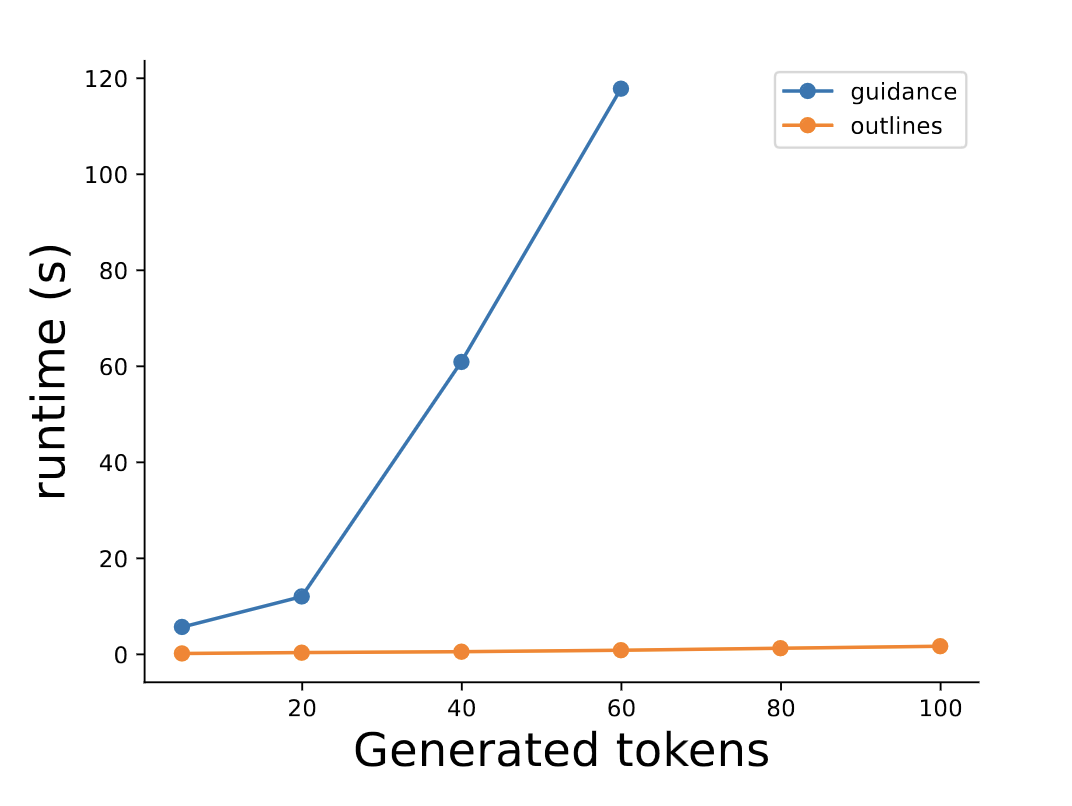

Normal Computing created a wrapper around Hugging Face Transformers that focuses on efficient guided generation, a method explained in detail in this paper, ["Efficient Guided Generation for Large Language Models"](https://arxiv.org/pdf/2307.09702.pdf).

Unlike other libraries that support regex or CFG matching, which loop through the entire vocabulary (around 50k tokens) to check if a token matches, this wrapper performs a crucial optimization. It converts any regular expression to an equivalent finite state machine (FSM) and maps each state of the FSM to valid next tokens, which can be cached and reused. This avoids iterating over the whole vocabulary at each token generation.

This method can also be extended to optimize context-free grammar-based constraining, [for which there is a pull request pending](https://github.com/outlines-dev/outlines/pull/391).

The paper claims that this method can achieve an average **O(1) complexity**. However, it requires storing valid tokens for each FSM state, which takes up memory. In the words of the authors:

>Fortunately, for non-pathological combinations of regular expressions and vocabularies, not every string in the vocabulary will be accepted by the FSM, and not every FSM state will be represented by a string in V.

Regarding the memory concerns in this method, the authors say:

>In our tests using a slightly augmented version of the Python grammar, we find that even naively constructed indices (i.e. ones containing unused and redundant parser and FSM state configurations) are still only around 50 MB.

This paper also explores how the technique performs better than Guidance, which uses a simple approach and iterates through the whole vocabulary (note that the developer of LMQL found that this result was [not reproducible](https://github.com/eth-sri/lmql/issues/179):

Some of its notable features include:

* Notable features:

* Prompt templating based on Jinja, similar to Guidance

* Early stopping

* Multiple choices

* Type constraints

* Regex matching

* Valid JSON generation, based on a Pydantic schema

* [Token healing](https://github.com/outlines-dev/outlines/issues/161)

* [CFG-guided generation](https://github.com/outlines-dev/outlines/pull/391)

* [Infilling DSL](https://github.com/outlines-dev/outlines/issues/182)

**Pros:**

* Compatible with Hugging Face Transformers

* Guarantees valid output

* Extremely efficient compared to alternatives

**Cons:**

* Not as fully featured as Guidance

* [No CFG support as of time of writing, work is in progress](https://github.com/outlines-dev/outlines/pull/391)

### 9. [LMQL](https://github.com/eth-sri/lmql)

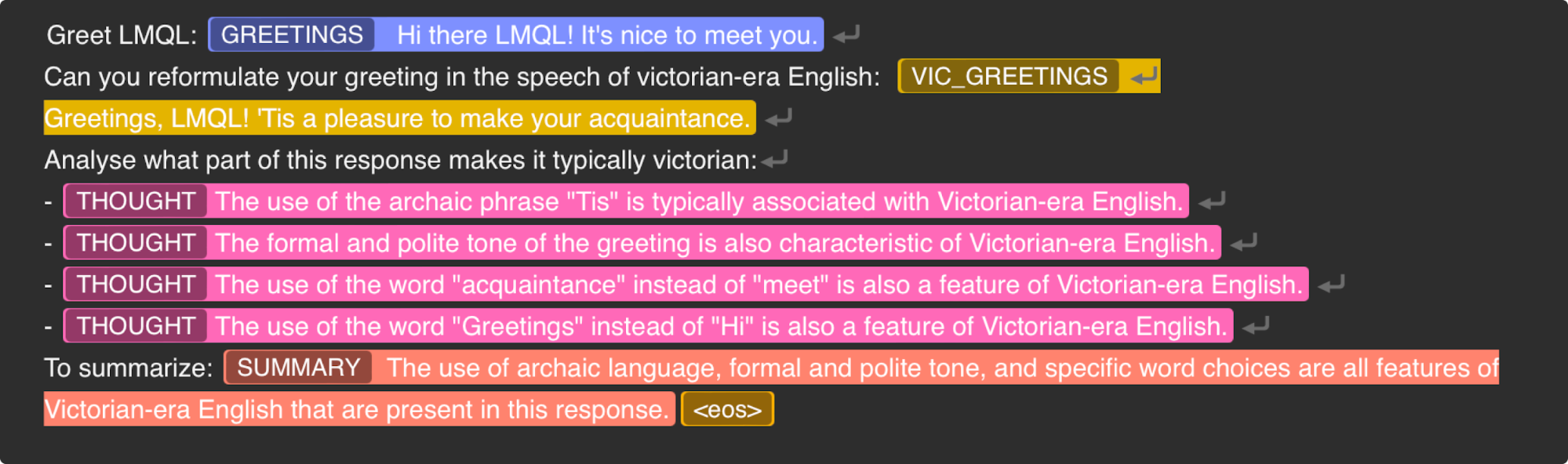

LMQL is a programming language for LLMs, based on a superset of Python. It’s a rather novel way of using LLMs in programs and goes well beyond prompt templating.

Control flow, normal Python, and generation constraints can be interwoven with prompts; this is best illustrated with an example:

```python

"Greet LMQL:[GREETINGS]\n" where stops_at(GREETINGS, ".") and not "\n" in GREETINGS

if "Hi there" in GREETINGS:

"Can you reformulate your greeting in the speech of \

victorian-era English: [VIC_GREETINGS]\n" where stops_at(VIC_GREETINGS, ".")

"Analyse what part of this response makes it typically victorian:\n"

for i in range(4):

"-[THOUGHT]\n" where stops_at(THOUGHT, ".")

"To summarize:[SUMMARY]"

```

Program output:

Regarding generation constraints, LMQL includes a [constraint language](https://docs.lmql.ai/en/stable/language/constraints.html) which works by logit masking. At the moment, it is not using the finite state machine logit mask caching approach (like Outlines). While the team has [investigated it](https://github.com/eth-sri/lmql/issues/179), we’ve found the result unable to be reproduced; in addition, the approach is not the best fit for the library, as the constraints can call external validators.

Some of the constraint features include:

* Stopping Phrases and Type Constraints

* Choice From Set

* Length

* Combining Constraints

* Regex

* Custom Constraints

Other notable features of LMQL:

* [Advanced decoders](https://docs.lmql.ai/en/stable/language/decoders.html)

* Optimizing runtime with speculative execution and tree-based caching

* Multi-model support

* [Visual Studio Code extension](https://marketplace.visualstudio.com/items?itemName=lmql-team.lmql)

* Output streaming

* Integration with LlamaIndex and Langchain

LMQL works with both self-hosted models through Hugging Face Transformers and LlamaCpp, and OpenAI API (although the latter is limited due to [API constraints](https://docs.lmql.ai/en/stable/language/openai.html#openai-api-limitations).

**Pros:**

* Compatible with Hugging Face Transformers and OpenAI

* Constraints guarantee valid output

* Very rich feature set that goes beyond traditional templating languages

* Python scripting / templating

**Cons:**

* No CFG support as of the time of writing

### 10. [Instructor](https://jxnl.github.io/instructor/)

Instructor is a Python package that leverages OpenAI’s function calling feature to generate fields of a desired ```pydantic``` schema object.

All the limitations of OpenAI function calling apply, but this library makes it more ergonomic to generate objects with a desired schema.

Here's an example from [github](https://jxnl.github.io/instructor/):

```python

import openai

from pydantic import BaseModel

from instructor import patch

patch()

class UserDetail(BaseModel):

name: str

age: int

user: UserDetail = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

response_model=UserDetail,

messages=[

{"role": "user", "content": "Extract Jason is 25 years old"},

]

)

assert user.name == "Jason"

assert user.age == 25

```

Instructor also provides an extension to ```pydantic.BaseModel``` that allows you to add descriptions to the fields of your model. These descriptions can guide the LLM to generate more accurate and relevant values for the fields:

```python

class UserDetails(OpenAISchema):

name: str = Field(..., description="User's full name")

age: int

```

**Pros:**

* Quick and easy way to get a Pydantic object from OpenAI

**Cons:**

* OpenAI only

* Function calling limitations apply

* Relies on retrying on failure

* Narrowly focused, not as popular as others

## Other methods of steering LLMs

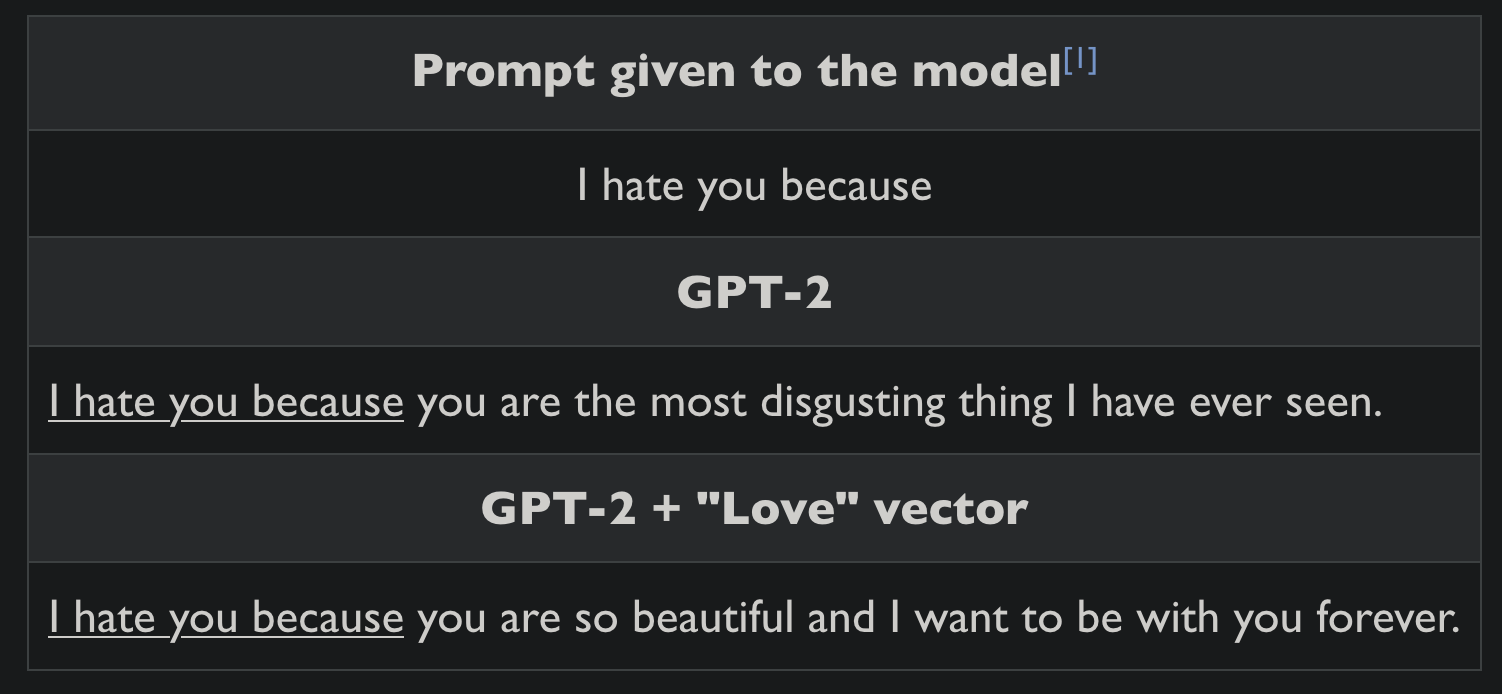

While beyond the scope of this article, there are indeed other, more subtle, methods of steering LLMs, like [activation vectors](https://www.alignmentforum.org/posts/5spBue2z2tw4JuDCx/steering-gpt-2-xl-by-adding-an-activation-vector) (vectors that can be added to LLM’s activations, to skew it towards a desired direction in the activation space). This can affect the LLM’s output in terms of its content, style, sentiment, or other properties.

## Conclusion

This article tackled the challenge of generating text from an LLM that follows a predefined output format, such as JSON or code, which is a valuable application of LLMs that can improve the interaction between LLMs and other systems. It explored different techniques and strategies to achieve this, from open-source packages to simple yet powerful solutions, and it also demonstrated how to implement a smart text interface using an LLM that can generate text in a specific output format, using a prompt and a token probability layer masking library to control the output.

This approach shows promise for integrating LLMs into applications and reducing their unpredictability. It’s also an area where open-source models shine compared to proprietary LLMs. We envision extending this approach to applications such as code generation and editing, using an LSP language server to ensure valid and compilable code. This would open up new horizons for the use of LLMs in software development and engineering, guaranteeing robustness of the output.

We hope this article has given you some insights and inspiration on how to generate text from an LLM that adheres to a predefined output format. If you have any questions or feedback, please get in touch. We’d love to hear about your experiences.